Zero-Shot Voice Style Transfer with Only Autoencoder Loss

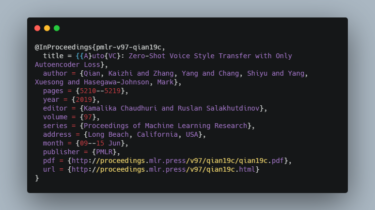

AutoVC

This is an unofficial implementation of AutoVC based on the official one. The D-Vector and vocoder are from yistLin/dvector and yistLin/universal-vocoder, respectively.

This implementation supports torch.jit, so the full model can be loaded with a single line:

model = torch.jit.load(model_path)

Pre-trained models are available here.
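As a rough illustration of the torch.jit workflow mentioned above, the sketch below scripts a small stand-in module, saves it, and loads it back with `torch.jit.load`. `TinyModel` and `roundtrip` are hypothetical names used only for this example. They are not part of this repository, and the real AutoVC model takes different inputs.

```python
import os
import tempfile

import torch


class TinyModel(torch.nn.Module):
    """Stand-in module for illustration only; NOT the AutoVC architecture."""

    def __init__(self) -> None:
        super().__init__()
        self.linear = torch.nn.Linear(4, 2)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return torch.tanh(self.linear(x))


def roundtrip(path: str) -> bool:
    """Script the module, save it, reload it, and compare outputs."""
    model = TinyModel().eval()
    # Compile to TorchScript and serialize code and weights to one file.
    torch.jit.script(model).save(path)
    # Loading needs no Python class definition, only the saved file.
    loaded = torch.jit.load(path, map_location="cpu").eval()
    x = torch.randn(3, 4)
    with torch.no_grad():
        return torch.allclose(model(x), loaded(x))


# Save to a temporary file and check that the reloaded model agrees.
with tempfile.TemporaryDirectory() as tmp_dir:
    outputs_match = roundtrip(os.path.join(tmp_dir, "tiny_model.pt"))
```

Passing `map_location="cpu"` is optional. It is a convenient default when a checkpoint saved on a GPU has to be opened on a machine without one.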

Preprocessing

python preprocess.py <data_dir> <save_dir> <encoder_path> [--seg_len seg] [--n_workers workers]

- data_dir: The directory of speakers.

- save_dir: The directory to save the processed files.

- encoder_path: The path of pre-trained D-Vector.

- seg: The length of segments for training.

- workers: The number of workers for preprocessing.
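The arguments listed above could be declared with `argparse` roughly as follows. This is a minimal sketch, not the repository's actual code: it assumes that `data_dir`, `save_dir`, and `encoder_path` are positional arguments, and the default values and example paths are placeholders chosen for illustration.

```python
import argparse
from pathlib import Path


def build_parser() -> argparse.ArgumentParser:
    """Build a parser mirroring the documented preprocessing arguments."""
    parser = argparse.ArgumentParser(prog="preprocess.py")
    # Assumed to be positional; the names come from the list above.
    parser.add_argument("data_dir", type=Path, help="directory of speakers")
    parser.add_argument("save_dir", type=Path, help="directory for processed files")
    parser.add_argument("encoder_path", type=Path, help="path of pre-trained D-Vector")
    # Default values are placeholders, NOT the repository's real defaults.
    parser.add_argument("--seg_len", type=int, default=128, help="segment length")
    parser.add_argument("--n_workers", type=int, default=1, help="number of workers")
    return parser


# Parse a hypothetical command line (all paths are made up for the example).
args = build_parser().parse_args(
    ["speakers/", "processed/", "dvector.pt", "--seg_len", "64"]
)
```

Here `args.seg_len` takes the value given on the command line, while `args.n_workers` falls back to the placeholder default because the flag was omitted.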

Training

python train.py [--n_steps steps] [--save_steps save] [--log_steps log] [--batch_size batch] [--seg_len seg]

- config_path: The config file of model hyperparameters.

- data_dir: The directory of preprocessed data.