Understand the Impact of Learning Rate on Neural Network Performance

Last Updated on September 12, 2020

Deep learning neural networks are trained using the stochastic gradient descent optimization algorithm.

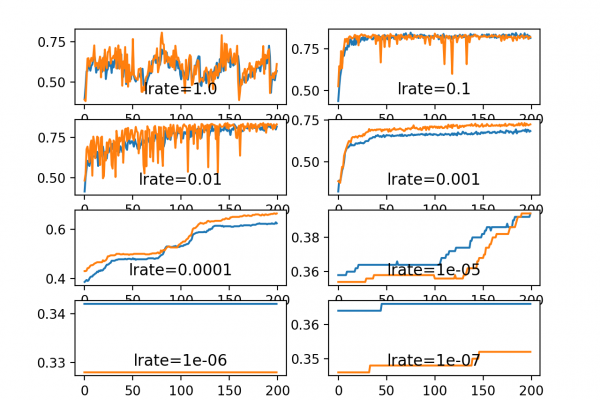

The learning rate is a hyperparameter that controls how much the model changes in response to the estimated error each time the model weights are updated. Choosing the learning rate is challenging: a value that is too small may result in a long training process that could get stuck, whereas a value that is too large may result in learning a sub-optimal set of weights too quickly or in an unstable training process.
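To make the trade-off concrete, here is a minimal sketch in plain Python. It is not the tutorial's own code: it assumes a toy one-dimensional loss, f(w) = w**2, and uses ordinary (non-stochastic) gradient descent, and the function name `gradient_descent` and the three learning rate values are chosen purely for illustration.

```python
def gradient_descent(learning_rate, steps=20, start=1.0):
    """Minimize the toy loss f(w) = w**2 and return the final weight.

    Illustrative assumption: a single weight and an exact gradient,
    rather than a neural network trained on mini-batches.
    """
    w = start
    for _ in range(steps):
        grad = 2.0 * w                # derivative of w**2 with respect to w
        w = w - learning_rate * grad  # the update is scaled by the learning rate
    return w


# The minimum of the toy loss is at w = 0, so a final weight
# closer to zero means training made more progress.
tiny = gradient_descent(0.001)     # barely moves away from the start
moderate = gradient_descent(0.1)   # approaches the minimum
large = gradient_descent(1.1)      # overshoots further on every step

print(f"tiny={tiny:.4f} moderate={moderate:.4f} large={large:.4f}")
```

In this toy setting, each step multiplies the weight by (1 - 2 * learning_rate), so the tiny rate makes almost no progress in 20 steps, the moderate rate shrinks the weight toward zero, and the large rate makes its magnitude grow. Real networks have far more complicated loss surfaces, but the same qualitative behavior is what the paragraph above describes.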

The learning rate may be the most important hyperparameter when configuring your neural network. It is therefore vital to know how to investigate its effects on model performance and to build an intuition for how it shapes model behavior.

In this tutorial, you will discover the effects of the learning rate, learning rate schedules, and adaptive learning rates on model performance.

After completing this tutorial, you will know:

- How large learning rates result in unstable training and tiny rates result in a failure to train.

- How momentum can accelerate training and how learning rate schedules can help the optimization process converge.

- Adaptive
