Undersampling Algorithms for Imbalanced Classification

Last Updated on January 20, 2020

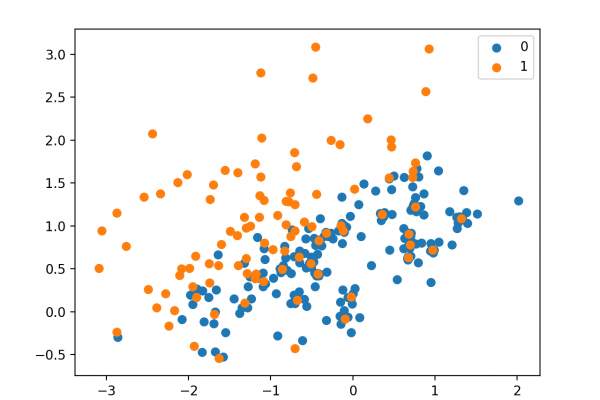

Resampling methods are designed to change the composition of a training dataset for an imbalanced classification task.

Most of the attention in resampling for imbalanced classification goes to oversampling the minority class. Nevertheless, a suite of techniques has been developed for undersampling the majority class, and these can be used in conjunction with effective oversampling methods.

There are many different types of undersampling techniques, although most can be grouped into those that select examples to keep in the transformed dataset, those that select examples to delete, and hybrids that combine both types of methods.

In this tutorial, you will discover undersampling methods for imbalanced classification.

After completing this tutorial, you will know:

- How to use the Near-Miss and Condensed Nearest Neighbor Rule methods that select examples to keep from the majority class.

- How to use Tomek Links and the Edited Nearest Neighbors Rule methods that select examples to delete from the majority class.

- How to use One-Sided Selection and the Neighborhood Cleaning Rule that combine methods for choosing examples to keep and delete from the majority class.
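To make the difference between "select to keep" and "select to delete" concrete, here is a minimal pure-Python sketch on a toy 2D dataset. It is an illustration under stated assumptions, not a library implementation. The function names `near_miss_1` and `tomek_links_delete` and the toy points are hypothetical. The first function follows the usual description of NearMiss version 1 (keep the majority examples with the smallest average distance to their closest minority examples). The second removes the majority member of each Tomek link (a cross-class pair of mutual nearest neighbors).

```python
import math


def distance(a, b):
    """Euclidean distance between two points given as tuples."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def near_miss_1(majority, minority, n_keep, k=3):
    """Select-to-keep sketch: keep the n_keep majority points with the
    smallest average distance to their k closest minority points."""
    def avg_dist_to_closest_minority(p):
        closest = sorted(distance(p, m) for m in minority)[:k]
        return sum(closest) / len(closest)

    return sorted(majority, key=avg_dist_to_closest_minority)[:n_keep]


def tomek_links_delete(majority, minority):
    """Select-to-delete sketch: drop each majority point that forms a
    Tomek link, i.e. it and a minority point are mutual nearest neighbors."""
    # Label 0 = majority, label 1 = minority (labels are an assumption here).
    points = [(p, 0) for p in majority] + [(p, 1) for p in minority]

    def nearest(i):
        others = (j for j in range(len(points)) if j != i)
        return min(others, key=lambda j: distance(points[i][0], points[j][0]))

    nn = [nearest(i) for i in range(len(points))]
    drop = {
        i
        for i, j in enumerate(nn)
        if points[i][1] == 0 and points[j][1] == 1 and nn[j] == i
    }
    return [p for i, (p, label) in enumerate(points) if label == 0 and i not in drop]


# Hypothetical toy data: six majority points, two minority points.
majority = [(0.0, 0.0), (0.2, 0.1), (1.0, 1.0), (2.9, 3.0), (5.0, 5.0), (6.0, 5.5)]
minority = [(3.0, 3.0), (3.5, 3.2)]

kept = near_miss_1(majority, minority, n_keep=2)
cleaned = tomek_links_delete(majority, minority)

print("NearMiss-1 keeps:", kept)
print("After removing Tomek links:", cleaned)
```

On this toy data the two families behave quite differently. The keep-style rule returns exactly the number of majority examples requested and favors those nearest the minority class, including `(2.9, 3.0)`. The delete-style rule removes only that same boundary point and leaves the other five majority examples, so the class distribution barely changes.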

