UmlsBERT: Augmenting Contextual Embeddings with a Clinical Metathesaurus

UmlsBERT

UmlsBERT: Clinical Domain Knowledge Augmentation of Contextual Embeddings Using the Unified Medical Language System Metathesaurus

General info

This is the code used for the paper UmlsBERT: Augmenting Contextual Embeddings with a Clinical Metathesaurus (NAACL 2021).

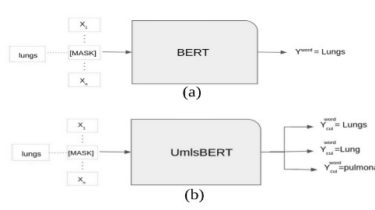

In this work, we introduced UmlsBERT, a contextual embedding model capable of integrating domain knowledge during pre-training. It was trained on biomedical corpora and uses the Unified Medical Language System (UMLS) clinical metathesaurus in two ways:

- We proposed a new multi-label loss function for the Masked Language Modelling (Masked LM) pre-training task of UmlsBERT. The loss takes into account connections between medical words through the Concept Unique Identifier (CUI) attribute of UMLS.

- We introduced a semantic group embedding that enriches the input embeddings process of UmlsBERT by forcing