Transition-based Graph Decoder for Neural Machine Translation

Abstract

While a number of works have shown gains from incorporating source-side symbolic syntactic and semantic structure into neural machine translation (NMT), far fewer have addressed the decoding of such structure.

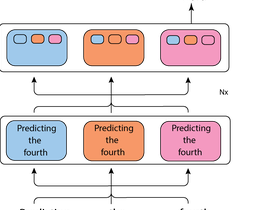

We propose a general Transformer-based approach for tree and graph decoding that generates a sequence of transitions, inspired by the similar RNN-based approach of Dyer et al. (2016).
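To make the idea of decoding a structure as a sequence of transitions concrete, here is a minimal sketch that replays a transition sequence to recover a dependency tree. It uses the textbook arc-standard system (SHIFT, LEFT-ARC, RIGHT-ARC) purely as an illustration. The action inventory, the function name `apply_transitions`, and the example sentence are assumptions for this sketch and are not taken from the proposed decoder.

```python
from typing import List, Tuple


def apply_transitions(words: List[str], transitions: List[str]) -> List[Tuple[int, int]]:
    """Replay arc-standard transitions and return (head, dependent) index pairs.

    Illustrative assumption: this is the generic arc-standard system,
    not necessarily the transition set used by the proposed decoder.
    """
    stack: List[int] = []                    # indices of partially processed words
    buffer = list(range(len(words)))         # indices of words not yet shifted
    arcs: List[Tuple[int, int]] = []

    for action in transitions:
        if action == "SHIFT":
            if not buffer:
                raise ValueError("SHIFT with an empty buffer")
            stack.append(buffer.pop(0))
        elif action in ("LEFT-ARC", "RIGHT-ARC"):
            if len(stack) < 2:
                raise ValueError(f"{action} needs two items on the stack")
            if action == "LEFT-ARC":
                # Second-from-top becomes a dependent of the top item.
                dependent = stack.pop(-2)
            else:
                # Top item becomes a dependent of the item below it.
                dependent = stack.pop()
            arcs.append((stack[-1], dependent))
        else:
            raise ValueError(f"unknown transition: {action}")
    return arcs


words = ["She", "reads", "books"]
transitions = ["SHIFT", "SHIFT", "LEFT-ARC", "SHIFT", "RIGHT-ARC"]
arcs = apply_transitions(words, transitions)
for head, dependent in arcs:
    print(f"{words[head]} -> {words[dependent]}")
```

The point of the sketch is that a flat action sequence fully determines the tree, so a sequence model that predicts actions one at a time is implicitly predicting structure.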

Experiments using the proposed decoder with Universal Dependencies syntax on English-German, German-English and English-Russian show improved performance over the standard Transformer decoder, as well as over ablated versions