Training RNNs as Fast as CNNs

News

SRU++, a new SRU variant, is released. [tech report] [blog]

The experimental code and SRU++ implementation are available on the dev branch which will be merged into master later.

About

SRU is a recurrent unit that can run over 10 times faster than cuDNN LSTM, without loss of accuracy tested on many tasks.

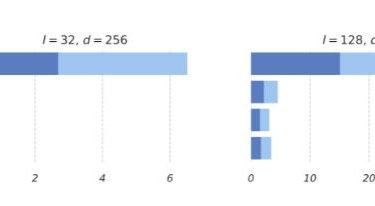

Average processing time of LSTM, conv2d and SRU, tested on GTX 1070

For example, the figure above presents the processing time of a single mini-batch of 32 samples. SRU achieves 10 to 16 times speed-up compared to LSTM, and operates as fast as (or faster than) word-level convolution using conv2d.