Towards Data-Efficient Detection Transformers

By Wen Wang, Jing Zhang, Yang Cao, Yongliang Shen, and Dacheng Tao

This repository is an official implementation of DE-CondDETR and DELA-CondDETR in the paper Towards Data-Efficient Detection Transformers.

For the implementation of DE-DETR and DELA-DETR, please refer to DE-DETRs.

Introduction

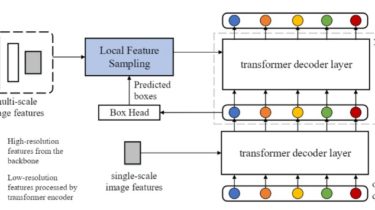

TL; DR. We identify the data-hungry issue of existing detection transformers and alleviate it by simply alternating how key and value sequences are constructed in the cross-attention layer, with minimum modifications to the original models. Besides, we introduce a simple yet effective label augmentation method to provide richer supervision and improve data efficiency.

Abstract. Detection Transformers have achieved competitive performance on the sample-rich COCO dataset. However, we show most of them suffer from significant performance drops on small-size