Tour of Evaluation Metrics for Imbalanced Classification

Last Updated on January 14, 2020

A classifier is only as good as the metric used to evaluate it.

If you choose the wrong metric to evaluate your models, you are likely to choose a poor model, or in the worst case, be misled about the expected performance of your model.

Choosing an appropriate metric is challenging in applied machine learning generally, but it is particularly difficult for imbalanced classification problems. First, most of the widely used standard metrics assume a balanced class distribution. Second, in imbalanced classification, not all classes, and therefore not all prediction errors, are typically equal.
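To make the first point concrete, here is a minimal sketch in plain Python. The 1:99 dataset and the always-predict-the-majority "model" are hypothetical, chosen only to illustrate how a metric that assumes balanced classes can look excellent while the minority class is ignored entirely.

```python
# Hypothetical imbalanced dataset: 10 minority-class examples (label 1)
# and 990 majority-class examples (label 0).
y_true = [1] * 10 + [0] * 990

# A naive "model" that ignores its input and always predicts the majority class.
y_pred = [0] * len(y_true)

# Accuracy: fraction of all predictions that are correct.
correct = sum(t == p for t, p in zip(y_true, y_pred))
accuracy = correct / len(y_true)

# Recall on the minority class: fraction of true positives that were found.
true_positives = sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred))
recall = true_positives / sum(y_true)

print(f"accuracy: {accuracy:.2f}")
print(f"minority-class recall: {recall:.2f}")
```

The accuracy is high only because the majority class dominates the count of correct predictions; the recall shows that not a single minority-class example was detected.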

In this tutorial, you will discover metrics that you can use for imbalanced classification.

After completing this tutorial, you will know:

- About the challenge of choosing metrics for classification, and how it is particularly difficult when there is a skewed class distribution.

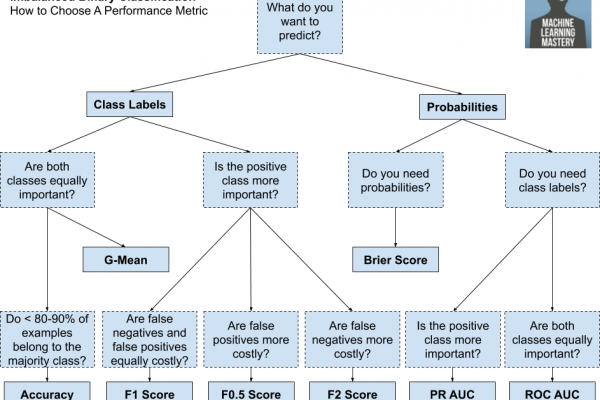

- That metrics for evaluating classifier models fall into three main types, referred to as rank, threshold, and probability.

- How to choose a metric for imbalanced classification if you don’t know where to start.
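As a rough illustration of the three metric types listed above, the sketch below computes one commonly used metric from each family in plain Python: F1 (threshold), ROC AUC (rank), and the Brier score (probability). The choice of these three representatives, the tiny dataset, and the function names are assumptions made for illustration; they are not taken from the tutorial text itself.

```python
# Hypothetical labels and predicted probabilities for the positive class.
y_true = [0, 0, 0, 0, 0, 0, 0, 0, 1, 1]
y_prob = [0.1, 0.2, 0.1, 0.3, 0.6, 0.2, 0.1, 0.4, 0.7, 0.4]


def f1_at_threshold(y_true, y_prob, threshold=0.5):
    """Threshold metric: convert probabilities to crisp labels, then score."""
    y_pred = [int(p >= threshold) for p in y_prob]
    tp = sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred))
    fp = sum(t == 0 and p == 1 for t, p in zip(y_true, y_pred))
    fn = sum(t == 1 and p == 0 for t, p in zip(y_true, y_pred))
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def roc_auc(y_true, y_prob):
    """Rank metric: probability that a random positive is scored above
    a random negative (ties count as half)."""
    pos = [p for t, p in zip(y_true, y_prob) if t == 1]
    neg = [p for t, p in zip(y_true, y_prob) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def brier_score(y_true, y_prob):
    """Probability metric: mean squared error of the predicted probabilities."""
    return sum((p - t) ** 2 for t, p in zip(y_true, y_prob)) / len(y_true)


f1 = f1_at_threshold(y_true, y_prob)
auc = roc_auc(y_true, y_prob)
brier = brier_score(y_true, y_prob)
print(f"F1 at 0.5: {f1:.3f}")
print(f"ROC AUC:   {auc:.3f}")
print(f"Brier:     {brier:.3f}")
```

The three functions consume the same predictions differently: the threshold metric only sees crisp labels, the rank metric only sees the ordering of the scores, and the probability metric penalises how far each predicted probability is from the true label.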

Kick-start your project with my new book Imbalanced Classification with Python, including step-by-step tutorials and the Python source
