This Looks Like That, Because … Explaining Prototypes for Interpretable Image Recognition

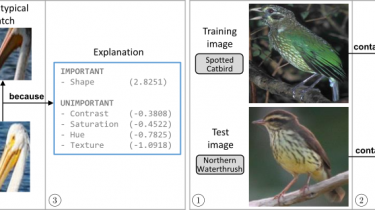

Image recognition with prototypes is considered an interpretable alternative to black-box deep learning models. Classification depends on the extent to which a test image “looks like” a prototype...

However, perceptual similarity for humans can differ from the similarity learnt by the model. A user is unaware of the underlying classification strategy and does not know which image characteristic (e.g., color or shape) is dominant for the decision. We address this ambiguity and argue that prototypes should be explained. Visualizing prototypes alone can be insufficient for understanding exactly what a prototype represents, and