Stochastic Gradient Boosting with XGBoost and scikit-learn in Python

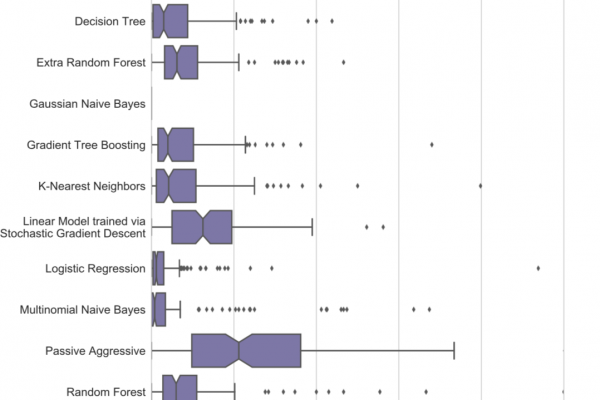

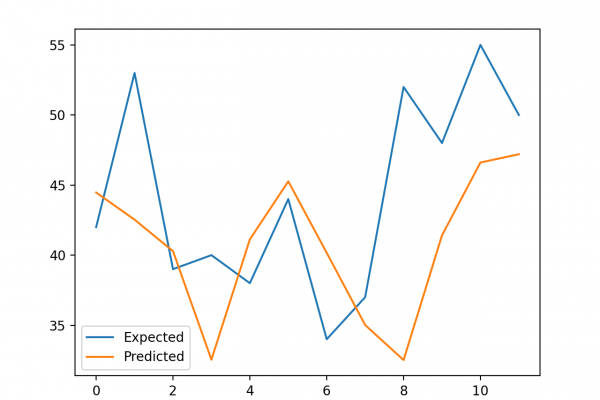

Last Updated on August 27, 2020

A simple technique for ensembling decision trees involves training trees on subsamples of the training dataset. Training each tree on a random subset of the rows in the training data is called bagging. When, in addition, only a random subset of the features (columns) is considered when choosing each split point, this is called random forest. These techniques can also be used in the gradient tree boosting model in a technique called stochastic gradient boosting. […]
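To make the idea concrete, here is a minimal from-scratch sketch of stochastic gradient boosting. It assumes squared-error regression and, for brevity, uses one-split trees (stumps) as base learners, so "per tree" and "per split" column sampling coincide. The names `fit_sgb`, `predict_sgb`, `subsample` and `colsample` are hypothetical and are not XGBoost's API. Each round fits a stump to the current residuals (the negative gradient of squared error) using a random subset of the rows drawn without replacement, which is what makes the boosting stochastic. `colsample` optionally restricts which features each stump may split on, in the spirit of random forest.

```python
import numpy as np


def fit_stump(X, r, features):
    """Fit a one-split regression tree (stump) to residuals r using only `features`.

    Returns (feature_index, threshold, left_value, right_value) minimizing squared error.
    """
    best, best_err = None, np.inf
    for j in features:
        # Candidate thresholds: every distinct value except the largest,
        # so both sides of the split are non-empty.
        for t in np.unique(X[:, j])[:-1]:
            left = X[:, j] <= t
            lv, rv = r[left].mean(), r[~left].mean()
            err = ((r[left] - lv) ** 2).sum() + ((r[~left] - rv) ** 2).sum()
            if err < best_err:
                best, best_err = (j, t, lv, rv), err
    if best is None:
        # Every sampled feature is constant: predict the mean residual everywhere.
        m = r.mean()
        best = (features[0], np.inf, m, m)
    return best


def predict_stump(stump, X):
    j, t, lv, rv = stump
    return np.where(X[:, j] <= t, lv, rv)


def fit_sgb(X, y, n_trees=100, learning_rate=0.1, subsample=0.5, colsample=1.0, seed=0):
    """Stochastic gradient boosting with squared-error loss (illustrative sketch)."""
    rng = np.random.default_rng(seed)
    n, p = X.shape
    n_rows = max(1, int(subsample * n))  # rows drawn for each tree
    n_cols = max(1, int(colsample * p))  # features each tree may split on
    base = y.mean()                      # initial constant prediction
    pred = np.full(n, base)
    stumps = []
    for _ in range(n_trees):
        # For squared error, the negative gradient is simply the residual.
        residual = y - pred
        # The stochastic part: each tree sees a random subset of the rows,
        # drawn without replacement.
        rows = rng.choice(n, size=n_rows, replace=False)
        # Optional random-forest-style feature subsampling.
        cols = rng.choice(p, size=n_cols, replace=False)
        stump = fit_stump(X[rows], residual[rows], cols)
        stumps.append(stump)
        # Shrink each tree's contribution and update predictions for ALL rows.
        pred += learning_rate * predict_stump(stump, X)
    return base, learning_rate, stumps


def predict_sgb(model, X):
    base, learning_rate, stumps = model
    pred = np.full(X.shape[0], base)
    for s in stumps:
        pred += learning_rate * predict_stump(s, X)
    return pred


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    X = rng.uniform(size=(200, 3))
    # Target depends only on feature 0, plus a little noise.
    y = np.where(X[:, 0] > 0.5, 3.0, 0.0) + 0.1 * rng.standard_normal(200)
    model = fit_sgb(X, y, n_trees=100, learning_rate=0.1, subsample=0.5, colsample=0.67)
    mse = np.mean((predict_sgb(model, X) - y) ** 2)
    print(f"training MSE: {mse:.4f} (variance of y: {np.var(y):.4f})")
```

Sampling rows without replacement follows Friedman's formulation of stochastic gradient boosting; bagging and random forest typically use bootstrap samples instead. In XGBoost's scikit-learn wrapper (`XGBClassifier`, `XGBRegressor`), the corresponding knobs are the `subsample` (rows per tree), `colsample_bytree` (columns per tree), `colsample_bylevel` (columns per depth level) and `colsample_bynode` (columns per split) parameters.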