How to Evaluate Gradient Boosting Models with XGBoost in Python

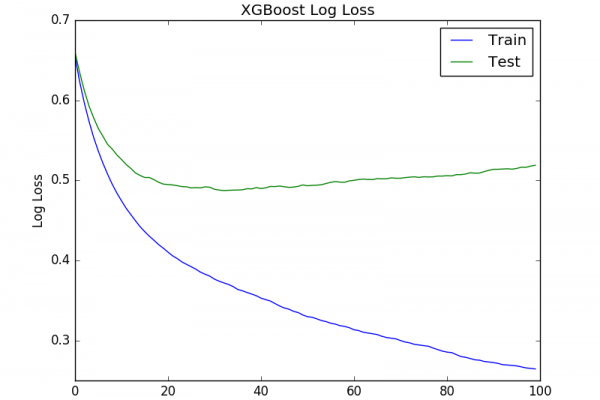

Last Updated on August 27, 2020

The goal of predictive modeling is to develop a model that is accurate on unseen data. This can be achieved using statistical techniques in which the training dataset is carefully used to estimate the model's performance on new, unseen data. In this tutorial, you will discover how to evaluate the performance of your gradient boosting models with XGBoost in Python. After completing this tutorial, you will know: How to evaluate […]
