Statistics for Evaluating Machine Learning Models

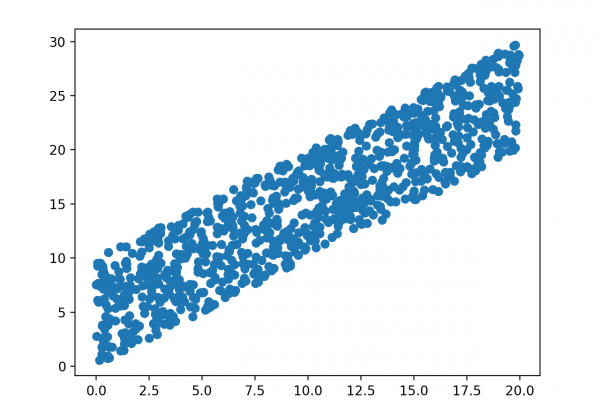

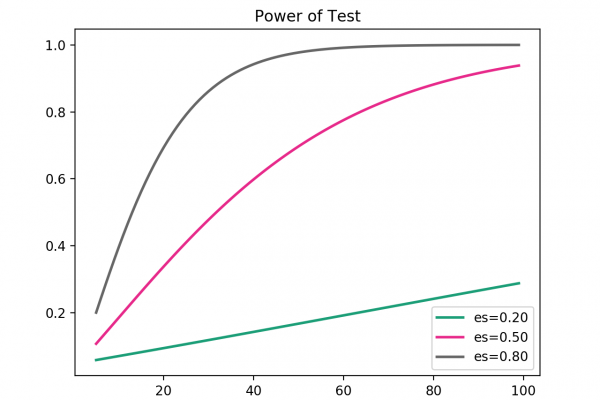

Last Updated on August 14, 2020

Tom Mitchell’s classic 1997 book “Machine Learning” provides a chapter dedicated to statistical methods for evaluating machine learning models. Statistics provides an important set of tools used at each step of a machine learning project. A practitioner cannot effectively evaluate the skill of a machine learning model without using statistical methods. Unfortunately, statistics is an area that is foreign to most developers and computer science graduates. This makes the chapter in Mitchell’s seminal machine […]
