How to Calculate Nonparametric Statistical Hypothesis Tests in Python

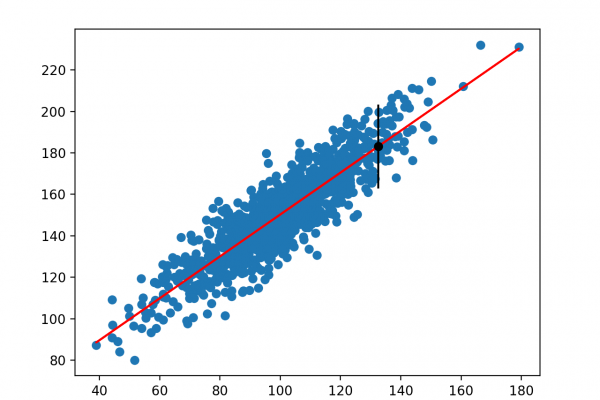

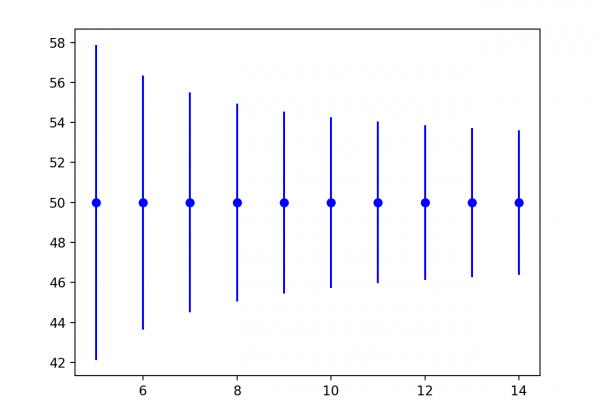

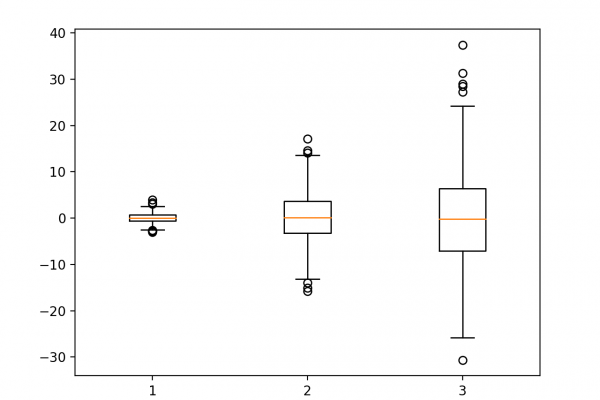

Last Updated on August 8, 2019

In applied machine learning, we often need to determine whether two data samples have the same or different distributions. We can answer this question using statistical significance tests, which quantify how likely the observed samples would be if both were drawn from the same distribution. If the data does not have the familiar Gaussian distribution, we must resort to the nonparametric versions of these significance tests. These tests operate in a similar manner, but are distribution free, requiring that real […]
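As a rough illustration of the idea, the sketch below compares two independent, non-Gaussian samples with the Mann-Whitney U test from SciPy (`scipy.stats.mannwhitneyu`). The exponential samples, the seed, and the 0.05 significance level are illustrative assumptions, not data or settings taken from the article.

```python
# Minimal sketch: a nonparametric two-sample test (Mann-Whitney U) with SciPy.
# The samples below are hypothetical, generated only for illustration.
from numpy.random import default_rng
from scipy.stats import mannwhitneyu

rng = default_rng(1)
# Two non-Gaussian (exponential) samples with different scales,
# so their distributions genuinely differ.
data1 = rng.exponential(scale=1.0, size=100)
data2 = rng.exponential(scale=3.0, size=100)

# H0: both samples come from the same distribution.
stat, p = mannwhitneyu(data1, data2, alternative="two-sided")
alpha = 0.05  # assumed significance level
print(f"U={stat:.1f}, p={p:.4f}")
if p > alpha:
    print("Fail to reject H0: no evidence the distributions differ")
else:
    print("Reject H0: the distributions likely differ")
```

The test works on the ranks of the pooled observations rather than their raw values, which is why it does not assume a Gaussian shape.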
