4 Types of Classification Tasks in Machine Learning

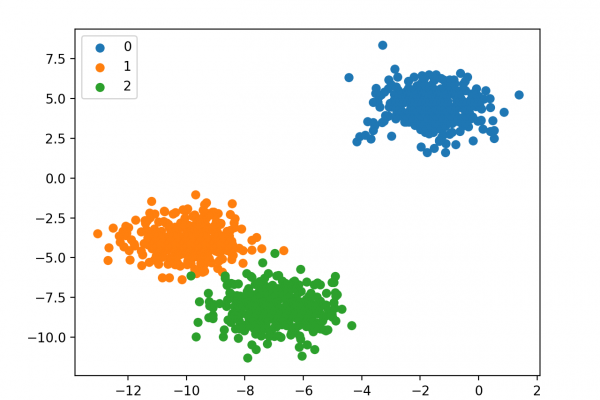

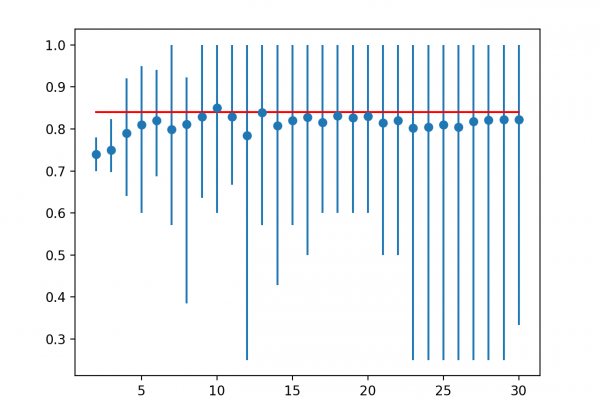

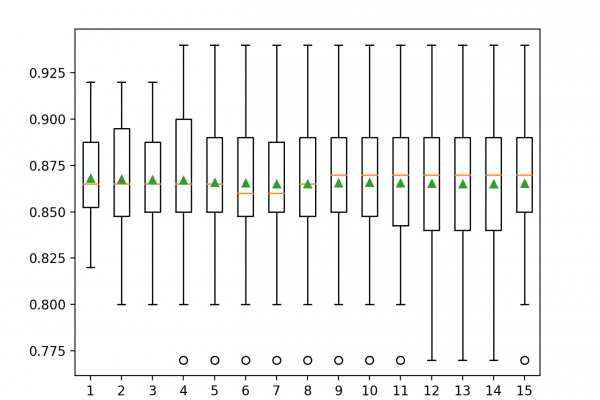

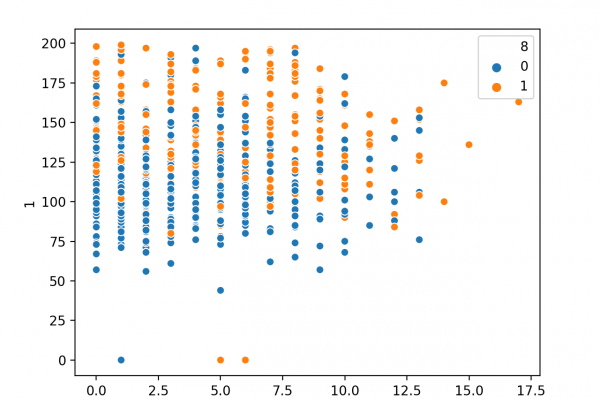

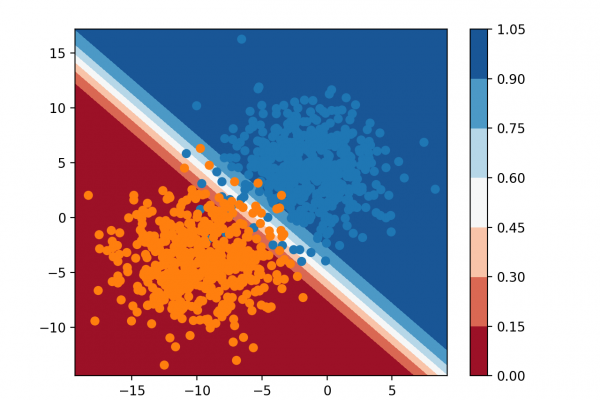

Last Updated on August 19, 2020

Machine learning is a field of study concerned with algorithms that learn from examples. Classification is a task that requires machine learning algorithms that learn how to assign a class label to examples from the problem domain. An easy-to-understand example is classifying emails as “spam” or “not spam.” There are many different types of classification tasks that you may encounter in machine learning and specialized approaches to […]
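As a rough illustration of an algorithm that "learns from examples" to assign a class label, here is a minimal sketch of the spam example in plain Python. It uses multinomial naive Bayes, which is one of many possible algorithms and not necessarily the one the full article discusses. The six training emails and the function names `train_naive_bayes` and `predict` are hypothetical, invented for this illustration.

```python
import math
from collections import Counter

# Hypothetical toy training data: (email text, class label).
# These examples are invented for illustration only.
TRAIN = [
    ("win a free prize now", "spam"),
    ("claim your free money", "spam"),
    ("limited offer win cash", "spam"),
    ("meeting moved to monday", "not spam"),
    ("please review the attached report", "not spam"),
    ("lunch with the team on friday", "not spam"),
]


def tokenize(text):
    """Lowercase and split on whitespace (a deliberately simple tokenizer)."""
    return text.lower().split()


def train_naive_bayes(examples):
    """Learn per-class email counts and per-class word counts from labeled examples."""
    class_counts = Counter()
    word_counts = {}
    vocab = set()
    for text, label in examples:
        class_counts[label] += 1
        counts = word_counts.setdefault(label, Counter())
        for word in tokenize(text):
            counts[word] += 1
            vocab.add(word)
    return class_counts, word_counts, vocab


def predict(model, text):
    """Return the class label with the highest log-posterior score for `text`."""
    class_counts, word_counts, vocab = model
    total_docs = sum(class_counts.values())
    best_label, best_score = None, -math.inf
    for label, n_docs in class_counts.items():
        # Log prior: fraction of training emails carrying this label.
        score = math.log(n_docs / total_docs)
        counts = word_counts[label]
        total_words = sum(counts.values())
        for word in tokenize(text):
            # Laplace (add-one) smoothing so an unseen word does not zero out the score.
            score += math.log((counts[word] + 1) / (total_words + len(vocab)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label


model = train_naive_bayes(TRAIN)
print(predict(model, "win free cash"))              # → spam
print(predict(model, "review the meeting report"))  # → not spam
```

Because each email receives exactly one of two labels, this toy setup is a binary classification task. On a realistic dataset you would normally use a tested library implementation rather than a hand-rolled one like this.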