Gradient Descent With AdaGrad From Scratch

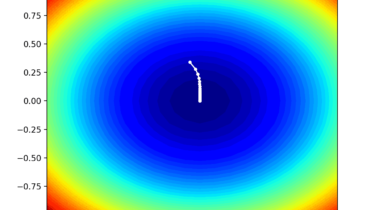

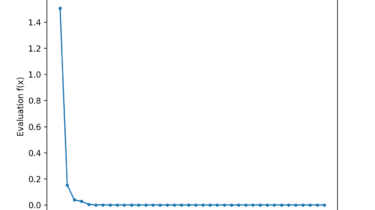

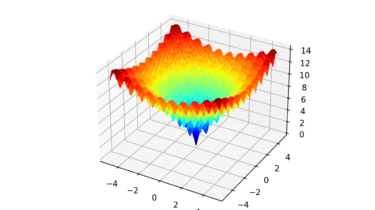

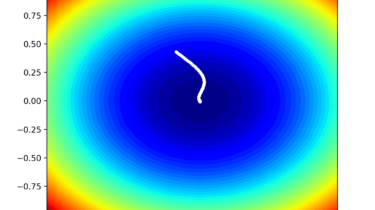

Gradient descent is an optimization algorithm that follows the negative gradient of an objective function to locate the function's minimum. A limitation of gradient descent is that it uses the same step size (learning rate) for every input variable. This can be a problem on objective functions whose curvature differs from one dimension to another, because each dimension may then call for a differently sized step. Adaptive Gradients, or AdaGrad for short, is […]
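The contrast between one shared step size and per-variable step sizes can be shown with a minimal sketch of the standard AdaGrad update. This is not the post's own implementation. It assumes a hypothetical test objective f(x, y) = x^2 + 10y^2, which is steeper along y than along x. The names `objective`, `gradient`, `gradient_descent` and `adagrad`, the `eps` term, and the step sizes are all illustrative choices.

```python
import math

# Hypothetical test objective (not from the original post), chosen so the
# curvature differs by dimension: f(x, y) = x^2 + 10 * y^2 is steeper along y.
def objective(v):
    x, y = v
    return x ** 2 + 10.0 * y ** 2


def gradient(v):
    x, y = v
    return [2.0 * x, 20.0 * y]


def gradient_descent(start, step_size, n_iter):
    """Plain gradient descent: one step size shared by every input variable."""
    v = list(start)
    for _ in range(n_iter):
        g = gradient(v)
        v = [vi - step_size * gi for vi, gi in zip(v, g)]
    return v


def adagrad(start, step_size, n_iter, eps=1e-8):
    """AdaGrad: each variable's step is divided by the square root of the
    sum of that variable's squared partial derivatives seen so far."""
    v = list(start)
    sq_grad_sum = [0.0] * len(v)  # per-variable running sum of squared gradients
    for _ in range(n_iter):
        g = gradient(v)
        sq_grad_sum = [s + gi ** 2 for s, gi in zip(sq_grad_sum, g)]
        # Effective step for variable i: step_size / (sqrt(sum of g_i^2) + eps)
        v = [
            vi - step_size * gi / (math.sqrt(s) + eps)
            for vi, gi, s in zip(v, g, sq_grad_sum)
        ]
    return v


if __name__ == "__main__":
    start = [1.0, 1.0]
    print("GD, step 0.1:     ", gradient_descent(start, step_size=0.1, n_iter=100))
    print("GD, step 0.05:    ", gradient_descent(start, step_size=0.05, n_iter=100))
    print("AdaGrad, step 0.5:", adagrad(start, step_size=0.5, n_iter=100))
```

On this objective, plain gradient descent with `step_size=0.1` never settles along y. Each update overshoots to the opposite side, so y flips between 1 and -1. A smaller step such as 0.05 does converge, which shows that the steep dimension caps the step size for every variable. AdaGrad divides each partial derivative by the root of its own accumulated squares. On this separable quadratic, the constant factors 2 and 20 therefore cancel (ignoring `eps`), so from `[1.0, 1.0]` both coordinates shrink in lockstep.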