How to Reduce Generalization Error With Activity Regularization in Keras

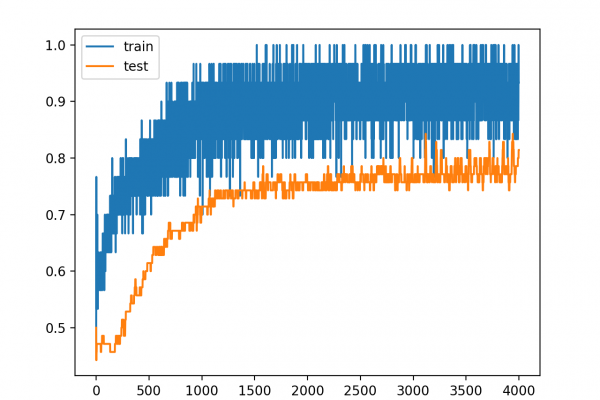

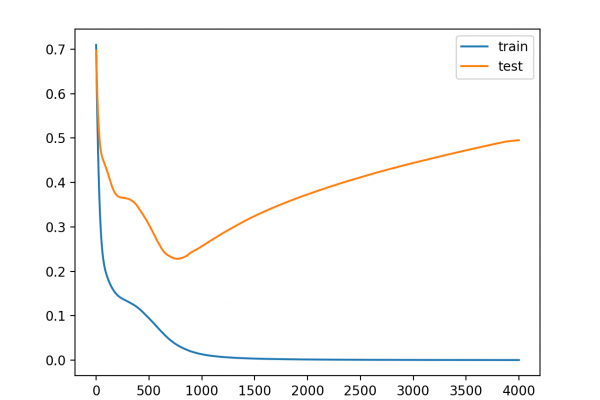

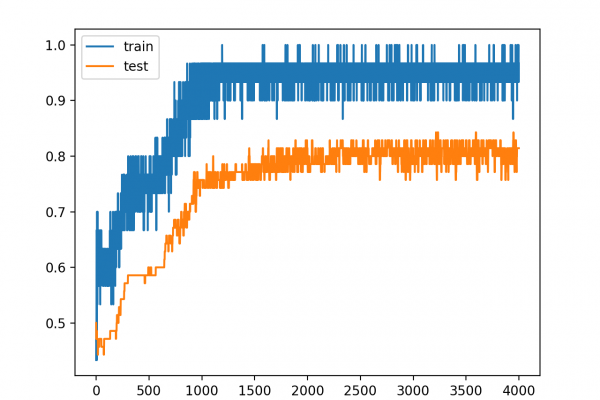

Last Updated on August 25, 2020

Activity regularization encourages a neural network to learn sparse features, that is, sparse internal representations of raw observations. Sparse learned representations are commonly sought in autoencoders (called sparse autoencoders) and in encoder-decoder models. The approach can also be used more generally to reduce overfitting and improve a model’s ability to generalize to new observations. In this tutorial, you will discover the Keras API for adding activity regularization to deep learning […]
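Activity regularization penalizes a layer's outputs (its activations) rather than its weights. The minimal NumPy sketch below is an illustration under stated assumptions, not Keras's internal implementation. It adds an L1 penalty on a ReLU hidden layer's activations to a mean squared error loss. The function names `l1_activity_penalty` and `penalized_loss` and the default coefficient `l1=1e-3` are hypothetical choices made for this example. In Keras itself, the same idea is usually expressed by passing `activity_regularizer=tf.keras.regularizers.l1(...)` to a layer such as `Dense`.

```python
import numpy as np


def relu(x):
    """Rectified linear activation: negative pre-activations become 0."""
    return np.maximum(x, 0.0)


def l1_activity_penalty(activations, l1=1e-3):
    """Hypothetical helper: L1 penalty on a layer's outputs, not its weights."""
    return l1 * np.sum(np.abs(activations))


def penalized_loss(x, y, w_hidden, w_out, l1=1e-3):
    """Hypothetical one-hidden-layer model: MSE plus an L1 activity penalty.

    Returns the total loss and the hidden activations that were penalized.
    """
    h = relu(x @ w_hidden)            # hidden representation we want to be sparse
    y_hat = h @ w_out                 # linear output layer
    mse = np.mean((y - y_hat) ** 2)   # data-fitting term
    return mse + l1_activity_penalty(h, l1), h
```

Because the L1 term grows with every nonzero activation, minimizing the combined loss tends to push many hidden units to exactly zero, which is the sparsity described above. Keras may scale the penalty differently (for example, by averaging it over the batch), so treat the magnitudes here as illustrative only.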
