Stacking Ensemble for Deep Learning Neural Networks in Python

Last Updated on August 28, 2020

Model averaging is an ensemble technique where multiple sub-models contribute equally to a combined prediction.
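As a minimal sketch of equal-weight model averaging, assume each sub-model outputs class probabilities as a NumPy array. The ensemble averages those arrays and takes the most probable class. The probability values below are hypothetical, and `average_ensemble` is an illustrative helper, not a library function.

```python
import numpy as np

def average_ensemble(prob_list):
    """Combine sub-model predictions by giving each an equal vote.

    prob_list: list of arrays shaped (n_samples, n_classes), one per sub-model,
    each holding that sub-model's predicted class probabilities (assumed format).
    """
    stacked = np.stack(prob_list)        # shape (n_models, n_samples, n_classes)
    mean_probs = stacked.mean(axis=0)    # equal-weight average over the model axis
    return mean_probs, mean_probs.argmax(axis=1)

# Hypothetical probabilities from three sub-models for two samples and two classes.
model_a = np.array([[0.9, 0.1], [0.4, 0.6]])
model_b = np.array([[0.6, 0.4], [0.3, 0.7]])
model_c = np.array([[0.3, 0.7], [0.5, 0.5]])

probs, labels = average_ensemble([model_a, model_b, model_c])
print(probs)
print(labels)
```

Every sub-model contributes the same amount, regardless of how good it is. The techniques that follow address exactly this limitation.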

Model averaging can be improved by weighting each sub-model's contribution to the combined prediction by that sub-model's expected performance. This idea can be extended further by training an entirely new model to learn how to best combine the contributions from each sub-model. This approach is called stacked generalization, or stacking for short, and can result in better predictive performance than any single contributing model.
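The weighted variant can be sketched in a few lines. This sketch assumes each sub-model's expected performance is summarized as a single non-negative score, such as accuracy on a held-out validation set. The scores are normalized into weights that sum to one. The helper name and all numbers are hypothetical.

```python
import numpy as np

def weighted_average_ensemble(prob_list, scores):
    """Weight each sub-model's probabilities by its expected performance.

    scores: one non-negative number per sub-model, e.g. held-out accuracy
    (an assumption; any performance estimate could be used).
    """
    weights = np.asarray(scores, dtype=float)
    weights = weights / weights.sum()                   # normalise so the weights sum to 1
    stacked = np.stack(prob_list)                       # (n_models, n_samples, n_classes)
    combined = np.tensordot(weights, stacked, axes=1)   # weighted sum over the model axis
    return combined, combined.argmax(axis=1)

# Two hypothetical sub-models that disagree on one sample.
model_a = np.array([[0.2, 0.8]])   # stronger model (score 0.9)
model_b = np.array([[0.8, 0.2]])   # weaker model (score 0.3)

probs, labels = weighted_average_ensemble([model_a, model_b], scores=[0.9, 0.3])
print(probs, labels)
```

With equal weights, these two sub-models would tie at 0.5/0.5. Weighting by score lets the stronger sub-model decide. Stacking goes one step further: instead of fixing the weights by hand, a separate model learns how to combine the sub-models' outputs.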

In this tutorial, you will discover how to develop a stacked generalization ensemble for deep learning neural networks.

After completing this tutorial, you will know:

- Stacked generalization is an ensemble method where a new model learns how to best combine the predictions from multiple existing models.

- How to develop a stacking model using neural networks as a submodel and a scikit-learn classifier as the meta-learner.

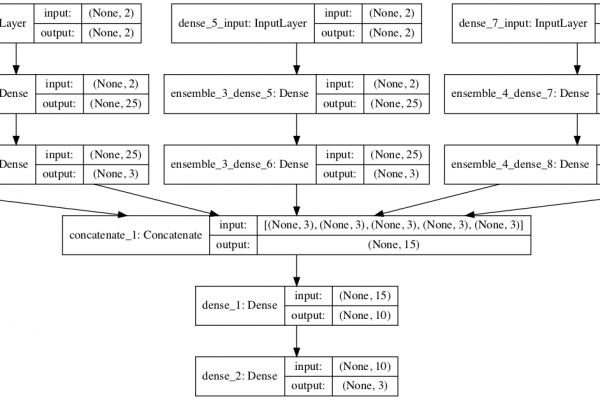

- How to develop a stacking model where neural network sub-models are embedded in a larger stacking ensemble model for training and prediction.
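The first stacking approach can be sketched with scikit-learn alone. This is an assumption-laden sketch, not the tutorial's own code. `MLPClassifier` stands in for the deep learning sub-models so the example stays self-contained. The dataset is a synthetic `make_blobs` problem, and `stacked_features` is a hypothetical helper. A `LogisticRegression` meta-learner is trained on the sub-models' predicted probabilities for data the sub-models never saw during training.

```python
import numpy as np
from sklearn.datasets import make_blobs
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.neural_network import MLPClassifier

# Synthetic 3-class problem (an illustrative assumption, not the tutorial's data).
X, y = make_blobs(n_samples=1500, centers=3, n_features=2, cluster_std=2.0, random_state=2)

# Disjoint splits: sub-models train on one, the meta-learner on another,
# and the whole ensemble is evaluated on the last.
X_train, X_rest, y_train, y_rest = train_test_split(X, y, test_size=0.6, random_state=1)
X_meta, X_test, y_meta, y_test = train_test_split(X_rest, y_rest, test_size=0.5, random_state=1)

# Sub-models: small MLPs standing in for neural networks from a deep learning framework.
members = []
for seed in range(3):
    model = MLPClassifier(hidden_layer_sizes=(25,), max_iter=1000, random_state=seed)
    model.fit(X_train, y_train)
    members.append(model)

def stacked_features(models, X):
    """Concatenate each sub-model's class probabilities into one feature row per sample."""
    return np.hstack([m.predict_proba(X) for m in models])

# Meta-learner: learns how to combine the sub-models' predictions.
meta = LogisticRegression(max_iter=1000)
meta.fit(stacked_features(members, X_meta), y_meta)

member_accs = [m.score(X_test, y_test) for m in members]
stack_acc = meta.score(stacked_features(members, X_test), y_test)
print("sub-model accuracies:", member_accs)
print("stacked accuracy:", stack_acc)
```

The meta-learner is fit on a split the sub-models did not train on. Had it been fit on the sub-models' training predictions, it would learn from overconfident, in-sample outputs and could over-trust them. The second approach in the list instead wires the trained networks and the combining layer into a single model. That requires a deep learning framework and is not shown here.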

