ROC Curves and Precision-Recall Curves for Imbalanced Classification

Last Updated on September 16, 2020

Most imbalanced classification problems involve two classes: a negative class that holds the majority of examples and a positive class that holds the minority.

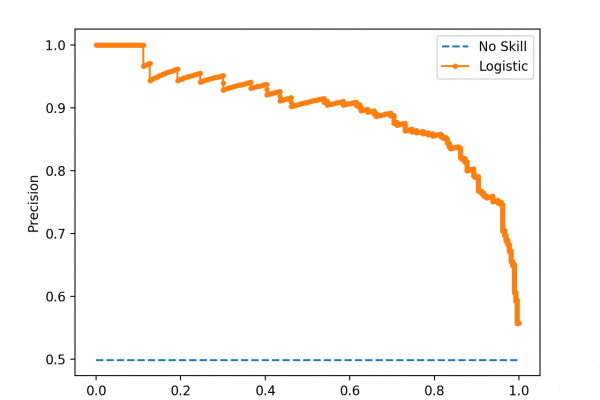

ROC Curves and Precision-Recall Curves are two diagnostic tools that help in interpreting binary (two-class) classification models.

Plotting these curves shows the trade-off in performance across different threshold values when interpreting probabilistic predictions. Each curve can also be summarized with an area under the curve (AUC) score, which can be used to directly compare classification models.
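To make the threshold trade-off concrete, here is a minimal sketch in plain Python. The labels, scores, and the helper names `confusion_at_threshold` and `rates` are made up for illustration and are not part of any library. Each threshold turns the predicted probabilities into crisp labels and yields one point on the ROC curve (false positive rate, true positive rate) and one point on the precision-recall curve (recall, precision).

```python
def confusion_at_threshold(y_true, y_score, threshold):
    """Count TP, FP, TN, FN when scores >= threshold are labelled positive."""
    tp = fp = tn = fn = 0
    for label, score in zip(y_true, y_score):
        predicted = 1 if score >= threshold else 0
        if predicted == 1 and label == 1:
            tp += 1
        elif predicted == 1 and label == 0:
            fp += 1
        elif predicted == 0 and label == 0:
            tn += 1
        else:
            fn += 1
    return tp, fp, tn, fn


def rates(y_true, y_score, threshold):
    """Return (true positive rate, false positive rate, precision)."""
    tp, fp, tn, fn = confusion_at_threshold(y_true, y_score, threshold)
    tpr = tp / (tp + fn)  # also called recall or sensitivity
    fpr = fp / (fp + tn)
    # Assumption: report precision as 1.0 when nothing is predicted positive.
    precision = tp / (tp + fp) if (tp + fp) > 0 else 1.0
    return tpr, fpr, precision


# Hypothetical toy data: 7 negatives (0) and 3 positives (1).
y_true = [0, 0, 0, 0, 0, 0, 0, 1, 1, 1]
y_score = [0.05, 0.1, 0.2, 0.3, 0.35, 0.6, 0.7, 0.4, 0.8, 0.9]

for threshold in (0.3, 0.5, 0.75):
    tpr, fpr, precision = rates(y_true, y_score, threshold)
    print(f"threshold={threshold:.2f} TPR={tpr:.3f} FPR={fpr:.3f} precision={precision:.3f}")
```

On this toy data, lowering the threshold from 0.5 to 0.3 recovers the last positive example (recall rises to 1.0) but admits more false positives, so precision drops. Sweeping the threshold over all values traces out the full curves.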

In this tutorial, you will discover ROC Curves and Precision-Recall Curves for imbalanced classification.

After completing this tutorial, you will know:

- ROC Curves and Precision-Recall Curves provide a diagnostic tool for binary classification models.

- ROC AUC and Precision-Recall AUC provide scores that summarize the curves and can be used to compare classifiers.

- ROC Curves and ROC AUC can be optimistic on severely imbalanced classification problems with few samples of the minority class.
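As a small illustration of the last point, the sketch below builds a deliberately contrived dataset with 100 negative and 2 positive examples and scores it two ways. It is plain Python with hypothetical helper names (`roc_auc`, `average_precision`). ROC AUC is computed from its pairwise-ranking interpretation, and the precision-recall curve is summarized with average precision, a common step-wise summary that is used here in place of a trapezoidal area. Both helpers assume at least one example of each class, and `average_precision` assumes there are no tied scores.

```python
def roc_auc(y_true, y_score):
    """ROC AUC as the fraction of (positive, negative) pairs ranked correctly."""
    pos = [s for y, s in zip(y_true, y_score) if y == 1]
    neg = [s for y, s in zip(y_true, y_score) if y == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties count as half a correct ranking
    return wins / (len(pos) * len(neg))


def average_precision(y_true, y_score):
    """Mean of the precision values at the rank of each positive example."""
    ranked = sorted(zip(y_score, y_true), reverse=True)
    tp = 0
    total = 0.0
    for rank, (_, label) in enumerate(ranked, start=1):
        if label == 1:
            tp += 1
            total += tp / rank  # precision among the top `rank` predictions
    return total / tp


# Contrived data: 95 low-scoring negatives, 5 high-scoring negatives,
# and 2 positives that score just below those 5 negatives.
neg_scores = [i / 1000 for i in range(95)] + [0.95, 0.96, 0.97, 0.98, 0.99]
pos_scores = [0.90, 0.91]
y_true = [0] * len(neg_scores) + [1] * len(pos_scores)
y_score = neg_scores + pos_scores

roc = roc_auc(y_true, y_score)
ap = average_precision(y_true, y_score)
print(f"ROC AUC: {roc:.3f}")            # ROC AUC: 0.950
print(f"Average precision: {ap:.3f}")   # Average precision: 0.226
```

Only 5 of the 100 negatives outrank the positives, so the false positive rate stays small and ROC AUC looks strong. Those same 5 examples outnumber the 2 positives, so precision at the top of the ranking is poor, and the precision-recall summary reflects that.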

Kick-start your project with my new book Imbalanced Classification with Python, including step-by-step tutorials and the Python source code files for all examples.

Let’s get started.