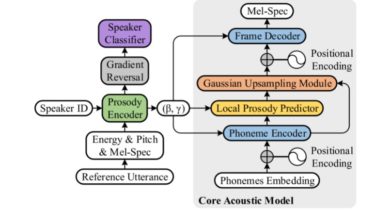

Robust Prosody Transfer Across Speakers for Expressive Speech Synthesis

Daft-Exprt – PyTorch Implementation

PyTorch Implementation of Daft-Exprt: Robust Prosody Transfer Across Speakers for Expressive Speech Synthesis

Validation logs of the synthesized mel-spectrogram and alignment, up to 70K steps, are shown below (VCTK_val_p237-088).

In the sections below, DATASET refers to the name of a dataset, such as VCTK.

Dependencies

You can install the Python dependencies with

pip3 install -r requirements.txt

A Dockerfile is also provided for Docker users.

Inference

Download the pretrained models and put them in output/ckpt/DATASET/.
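Before running synthesis, it can help to confirm that the downloaded files ended up in the expected directory. The following is a minimal sketch, not part of this repository: the helper name list_checkpoints is hypothetical, and it only assumes the output/ckpt/DATASET/ layout described above. It makes no assumption about checkpoint file names or extensions.

```python
from pathlib import Path
from typing import List


def list_checkpoints(dataset: str, root: str = "output/ckpt") -> List[Path]:
    """Hypothetical helper: list the files found in <root>/<dataset>/.

    Returns an empty list when the directory does not exist, so the caller
    can tell that the pretrained models still need to be downloaded.
    """
    ckpt_dir = Path(root) / dataset
    if not ckpt_dir.is_dir():
        return []
    # Sort by path so the listing is stable across runs.
    return sorted(p for p in ckpt_dir.iterdir() if p.is_file())


if __name__ == "__main__":
    files = list_checkpoints("VCTK")  # "VCTK" stands in for DATASET
    if not files:
        print("No checkpoint files found in output/ckpt/VCTK/")
    for path in files:
        print(path)
```

If the listing is empty, the models were most likely placed in a different directory or under a different DATASET name.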

For multi-speaker TTS, run

python3 synthesize.py --text "YOUR_DESIRED_TEXT" --speaker_id SPEAKER_ID --restore_step RESTORE_STEP --mode single --dataset DATASET --ref_audio REF_AUDIO

to synthesize speech in the style of the reference audio at REF_AUDIO. The