Rethinking Spatial Dimensions of Vision Transformers

Byeongho Heo, Sangdoo Yun, Dongyoon Han, Sanghyuk Chun, Junsuk Choe, Seong Joon Oh | Paper

NAVER AI LAB

News

- Mar 30, 2021: Code & paper released

- Apr 2, 2021: PiT models with pretrained weights are added to timm repo. You can directly use PiT models with timm>=0.4.7.

- Jul 23, 2021: Accepted to ICCV 2021 as a poster session

Abstract

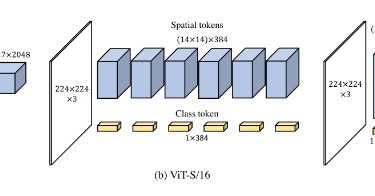

Vision Transformer (ViT) extends the application range of transformers from language processing to computer vision tasks as an alternative architecture to existing convolutional neural networks (CNNs). Since the transformer-based architecture is still new to computer vision modeling, the design conventions for an effective architecture have not yet been studied much. From the successful design principles of CNNs, we investigate