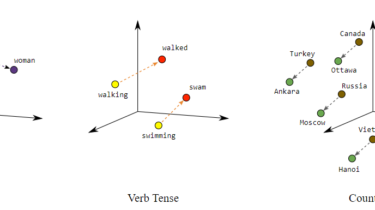

Represent word vectors in Natural Language Processing

We’ve all heard of word embeddings in natural language processing. The term refers to a representation of words for text analysis, typically a real-valued vector that encodes a word's meaning so that words that lie closer together in the vector space are expected to be similar in meaning.
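To make "closer in the vector space" concrete, here is a minimal sketch that compares words using cosine similarity, one common way to measure closeness between embeddings. The three-dimensional vectors and the names `toy_embeddings` and `cosine_similarity` are made up for illustration; real embeddings come from a trained model and usually have hundreds of dimensions.

```python
import math

# Hypothetical toy vectors, invented for this example (not from a trained model).
toy_embeddings = {
    "king": [0.80, 0.65, 0.10],
    "queen": [0.75, 0.70, 0.15],
    "apple": [0.10, 0.20, 0.90],
}


def cosine_similarity(u, v):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


sim_king_queen = cosine_similarity(toy_embeddings["king"], toy_embeddings["queen"])
sim_king_apple = cosine_similarity(toy_embeddings["king"], toy_embeddings["apple"])

print(f"king vs queen: {sim_king_queen:.3f}")
print(f"king vs apple: {sim_king_apple:.3f}")
```

With these toy values, "king" scores higher against "queen" than against "apple", which is the behaviour we would hope to see from a good embedding: related words point in similar directions.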