Repeated k-Fold Cross-Validation for Model Evaluation in Python

Last Updated on August 26, 2020

The k-fold cross-validation procedure is a standard method for estimating the performance of a machine learning algorithm or configuration on a dataset.
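To make the procedure concrete, here is a minimal, dependency-free sketch of a single run of k-fold cross-validation. It assumes a hypothetical toy model (a one-feature nearest-class-mean classifier) and synthetic data. The helper names `k_fold_indices`, `nearest_mean_accuracy` and `cross_validate` are illustrative and do not come from any library. The data is shuffled once and cut into k folds. Each fold is held out once as the test set while the model is fit on the remaining k-1 folds.

```python
import random

def k_fold_indices(n_samples, k, seed=None):
    """Yield (train_idx, test_idx) pairs; each sample lands in exactly one test fold."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must satisfy 2 <= k <= n_samples")
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # shuffle once, then cut into k folds
    # The first (n_samples % k) folds get one extra sample so sizes differ by at most 1.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test_idx = indices[start:start + size]
        train_idx = indices[:start] + indices[start + size:]
        yield train_idx, test_idx
        start += size

def nearest_mean_accuracy(X, y, train_idx, test_idx):
    """Hypothetical toy model: assign each 1-D test point to the closer training class mean."""
    means = {}
    for label in set(y[i] for i in train_idx):
        values = [X[i] for i in train_idx if y[i] == label]
        means[label] = sum(values) / len(values)
    correct = sum(1 for i in test_idx
                  if min(means, key=lambda c: abs(X[i] - means[c])) == y[i])
    return correct / len(test_idx)

def cross_validate(X, y, k=10, seed=None):
    """Return one accuracy score per fold from a single k-fold run."""
    return [nearest_mean_accuracy(X, y, tr, te)
            for tr, te in k_fold_indices(len(X), k, seed)]

# Synthetic 1-D data (assumption): class 0 centred at 0.0, class 1 centred at 1.5.
rng = random.Random(0)
y = [i % 2 for i in range(200)]
X = [rng.gauss(1.5 * label, 1.0) for label in y]

scores = cross_validate(X, y, k=10, seed=1)
print(f"single run, {len(scores)} folds: mean accuracy {sum(scores) / len(scores):.3f}")
```

In practice, `KFold` and `cross_val_score` in scikit-learn's `sklearn.model_selection` module provide this procedure; the hand-written version only makes each step visible.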

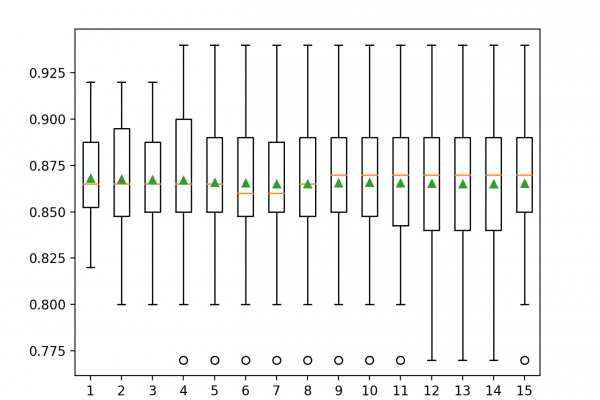

A single run of the k-fold cross-validation procedure may result in a noisy estimate of model performance. Different splits of the data may produce very different results.

Repeated k-fold cross-validation provides a way to improve the estimate of a machine learning model's performance. It involves simply repeating the cross-validation procedure multiple times and reporting the mean result across all folds from all runs. This mean is expected to be a more accurate estimate of the model's true, unknown mean performance on the dataset, and the improvement can be quantified using the standard error of the mean.
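The sketch below illustrates the idea without external dependencies. It repeats a shuffled k-fold split several times, using a different random seed per repeat, and pools every fold score. It assumes a hypothetical one-feature nearest-class-mean classifier and synthetic data; the helper names are illustrative, not a library API. The per-repeat means show the run-to-run noise of a single k-fold run, and the pooled mean is reported with its standard error: the sample standard deviation of all fold scores divided by the square root of their count.

```python
import math
import random
import statistics

def k_fold_indices(n_samples, k, seed):
    """Yield (train_idx, test_idx) pairs for one shuffled k-fold split."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        yield indices[:start] + indices[start + size:], indices[start:start + size]
        start += size

def nearest_mean_accuracy(X, y, train_idx, test_idx):
    """Hypothetical toy model: assign each 1-D test point to the closer training class mean."""
    means = {}
    for label in set(y[i] for i in train_idx):
        values = [X[i] for i in train_idx if y[i] == label]
        means[label] = sum(values) / len(values)
    correct = sum(1 for i in test_idx
                  if min(means, key=lambda c: abs(X[i] - means[c])) == y[i])
    return correct / len(test_idx)

def repeated_k_fold(X, y, k=10, n_repeats=3, seed=1):
    """Run k-fold CV n_repeats times, reshuffling each time; return all fold scores."""
    scores = []
    for r in range(n_repeats):
        # A different seed per repeat gives a different partition of the data.
        for train_idx, test_idx in k_fold_indices(len(X), k, seed + r):
            scores.append(nearest_mean_accuracy(X, y, train_idx, test_idx))
    return scores

# Synthetic 1-D data (assumption): class 0 centred at 0.0, class 1 centred at 1.5.
rng = random.Random(0)
y = [i % 2 for i in range(200)]
X = [rng.gauss(1.5 * label, 1.0) for label in y]

k, n_repeats = 10, 3
scores = repeated_k_fold(X, y, k=k, n_repeats=n_repeats)
# Per-repeat means can differ because each repeat uses a different split.
for r in range(n_repeats):
    run = scores[r * k:(r + 1) * k]
    print(f"repeat {r + 1}: mean accuracy {statistics.mean(run):.3f}")

mean = statistics.mean(scores)
# Standard error of the mean: sample standard deviation / sqrt(number of scores).
sem = statistics.stdev(scores) / math.sqrt(len(scores))
print(f"overall: {mean:.3f} (standard error {sem:.3f}) from {len(scores)} folds")
```

scikit-learn offers the same scheme as `RepeatedKFold(n_splits=10, n_repeats=3, random_state=1)` in `sklearn.model_selection`. Because the fold scores share training data, they are not independent, so this standard error is best read as a rough indicator of precision rather than an exact one.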

In this tutorial, you will discover repeated k-fold cross-validation for model evaluation.

After completing this tutorial, you will know:

- The mean performance reported from a single run of k-fold cross-validation may be noisy.

- Repeated k-fold cross-validation provides a way to reduce the error in the estimate of mean model performance.

- How to evaluate machine learning models using repeated k-fold cross-validation in Python.
