Python code for the ICLR 2022 Spotlight paper EViT: Expediting Vision Transformers via Token Reorganizations

This repository contains PyTorch evaluation code, training code and pretrained EViT models for the ICLR 2022 Spotlight paper:

Not All Patches are What You Need: Expediting Vision Transformers via Token Reorganizations

Youwei Liang, Chongjian Ge, Zhan Tong, Yibing Song, Jue Wang, Pengtao Xie

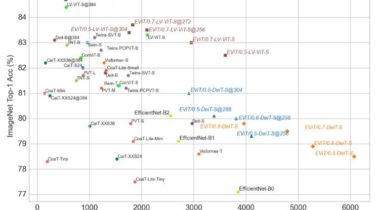

The proposed EViT models achieve a competitive trade-off between speed and accuracy.

If you use this code for a paper, please cite:

@inproceedings{liang2022evit,

title={Not All Patches are What You