How to Use Feature Extraction on Tabular Data for Machine Learning

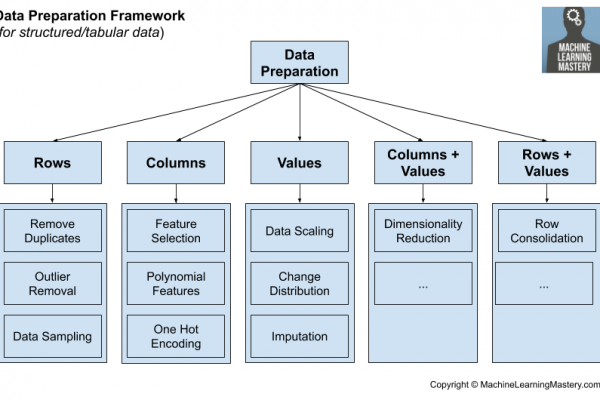

Last Updated on August 17, 2020

Machine learning predictive modeling performance is only as good as your data, and your data is only as good as the way you prepare it for modeling. The most common approach to data preparation is to study a dataset and review the expectations of a machine learning algorithm, then carefully choose the most appropriate data preparation techniques to transform the raw data to best meet the expectations of the algorithm. This is slow, expensive, […]
