How to Calculate Nonparametric Rank Correlation in Python

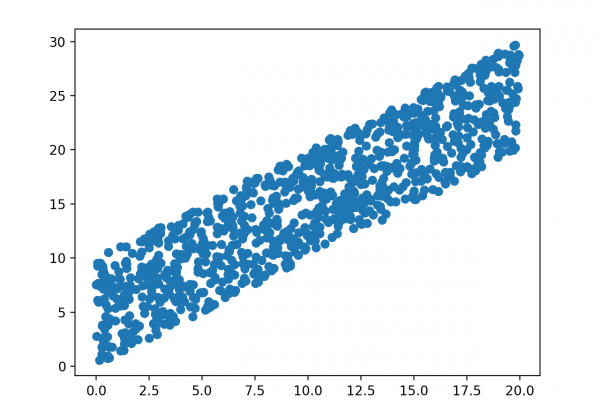

Last Updated on August 8, 2019

Correlation is a measure of the association between two variables. It is easy to calculate and interpret when both variables have a well-understood Gaussian distribution. When we do not know the distribution of the variables, we must use nonparametric rank correlation methods.

In this tutorial, you will discover rank correlation methods for quantifying the association between variables with a non-Gaussian distribution. After completing this tutorial, you will know: How rank correlation methods work […]
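To make the idea of rank correlation concrete, here is a minimal sketch that is not taken from the tutorial itself. It assumes Spearman's coefficient as the example method and computes it as the Pearson correlation of the ranked values. The helper names `average_ranks`, `pearson`, and `spearman` are hypothetical, chosen only for illustration; in practice a library routine such as `scipy.stats.spearmanr` would normally be used instead.

```python
from math import sqrt


def average_ranks(values):
    """Return 1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        # Extend j to cover the run of values tied with the one at position i.
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (spread_x * spread_y)


def spearman(x, y):
    """Spearman's rank correlation: Pearson correlation of the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Illustrative data (made up for this sketch): a monotonic but nonlinear relationship.
x = [1, 2, 3, 4, 5, 6, 7, 8]
y = [v ** 3 for v in x]

print("Pearson: ", pearson(x, y))
print("Spearman:", spearman(x, y))
```

Because `y` always increases with `x`, the two rankings are identical and the rank-based coefficient is essentially 1, while the Pearson coefficient on the raw values is lower because the relationship is not linear.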
