CNN Long Short-Term Memory Networks

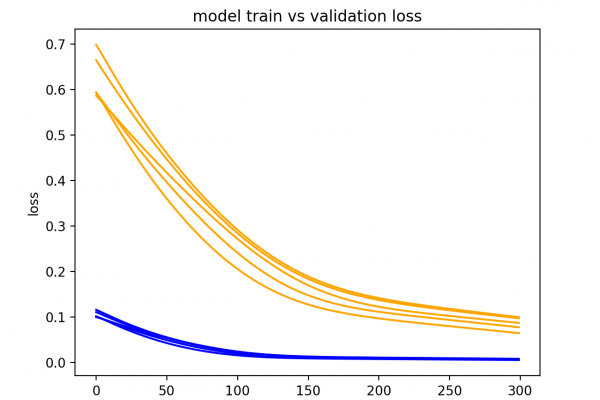

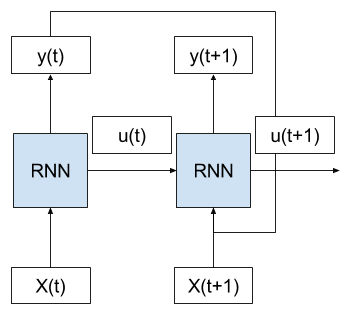

Last Updated on August 14, 2019

A gentle introduction to CNN LSTM recurrent neural networks, with example Python code.

Input with spatial structure, like images, cannot easily be modeled with the standard Vanilla LSTM. The CNN Long Short-Term Memory Network, or CNN LSTM for short, is an LSTM architecture specifically designed for sequence prediction problems with spatial inputs, like images or videos. It uses convolutional layers to extract features from each input in the sequence (for example, each frame of a video) and an LSTM to model how those features change over time.

In this post, you will discover the CNN LSTM architecture for sequence prediction. After completing this post, you will know: […]
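To make the architecture concrete, here is a minimal, framework-free Python sketch of a CNN LSTM forward pass. It is not the post's own code (which is not part of this excerpt), and it rests on several illustrative assumptions: single-channel frames, two hand-picked 3×3 edge kernels standing in for learned convolutional filters, ReLU plus global average pooling to reduce each feature map to one number, and a randomly initialized, untrained LSTM cell. All function and class names are hypothetical. Deep learning libraries provide trainable versions of each piece; Keras, for example, has a `TimeDistributed` wrapper for applying a CNN to every time step.

```python
import math
import random


def conv2d_valid(image, kernel):
    """Slide one 2D kernel over a 2D image: stride 1, no padding ("valid")."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def cnn_features(image, kernels):
    """CNN front end (assumed design): for each kernel, convolve, apply ReLU, then
    global-average-pool the feature map to one number. Returns one feature per kernel."""
    features = []
    for kernel in kernels:
        fmap = conv2d_valid(image, kernel)
        activations = [max(0.0, v) for row in fmap for v in row]
        features.append(sum(activations) / len(activations))
    return features


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def matvec(matrix, vector):
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


class LSTMCell:
    """One LSTM cell with input (i), forget (f), candidate (g) and output (o) gates.
    Weights are random and untrained: this only illustrates the data flow."""

    def __init__(self, input_size, hidden_size, rng):
        self.hidden_size = hidden_size
        n = input_size + hidden_size
        self.W = {gate: [[rng.uniform(-0.1, 0.1) for _ in range(n)] for _ in range(hidden_size)]
                  for gate in "ifgo"}
        self.b = {gate: [0.0] * hidden_size for gate in "ifgo"}

    def step(self, x, h, c):
        z = x + h  # concatenate the current input with the previous hidden state
        pre = {gate: [s + b for s, b in zip(matvec(self.W[gate], z), self.b[gate])]
               for gate in "ifgo"}
        i = [sigmoid(v) for v in pre["i"]]
        f = [sigmoid(v) for v in pre["f"]]
        cand = [math.tanh(v) for v in pre["g"]]
        o = [sigmoid(v) for v in pre["o"]]
        c_new = [fv * cv + iv * gv for fv, cv, iv, gv in zip(f, c, i, cand)]
        h_new = [ov * math.tanh(cv) for ov, cv in zip(o, c_new)]
        return h_new, c_new


def cnn_lstm_forward(frames, kernels, cell):
    """Apply the same CNN to every frame, then feed the feature vectors to the LSTM
    in order. Returns the final hidden state, which a prediction layer would consume."""
    h = [0.0] * cell.hidden_size
    c = [0.0] * cell.hidden_size
    for frame in frames:
        x = cnn_features(frame, kernels)
        h, c = cell.step(x, h, c)
    return h


if __name__ == "__main__":
    rng = random.Random(0)
    # Hypothetical input: 5 frames of 8x8 single-channel "images" with random pixels.
    frames = [[[rng.random() for _ in range(8)] for _ in range(8)] for _ in range(5)]
    kernels = [
        [[1, 0, -1], [1, 0, -1], [1, 0, -1]],  # vertical-edge detector
        [[1, 1, 1], [0, 0, 0], [-1, -1, -1]],  # horizontal-edge detector
    ]
    cell = LSTMCell(input_size=len(kernels), hidden_size=4, rng=rng)
    print(cnn_lstm_forward(frames, kernels, cell))  # four values, each in (-1, 1)
```

The key design choice is that the convolutional part (here, `kernels`) is shared across time steps: every frame passes through the same feature extractor, and only the LSTM carries information from one frame to the next.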
