On the Suitability of Long Short-Term Memory Networks for Time Series Forecasting

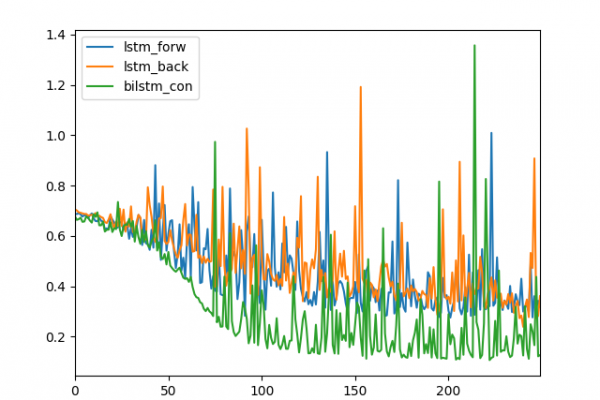

Last Updated on August 5, 2019

Long Short-Term Memory (LSTM) is a type of recurrent neural network that can learn the order dependence between items in a sequence. LSTMs promise to learn the context required to make predictions in time series forecasting problems, rather than having this context pre-specified and fixed. Despite this promise, there is some doubt as to whether LSTMs are appropriate for time series forecasting. In this post, we will look at […]
