Feature Selection for Time Series Forecasting with Python

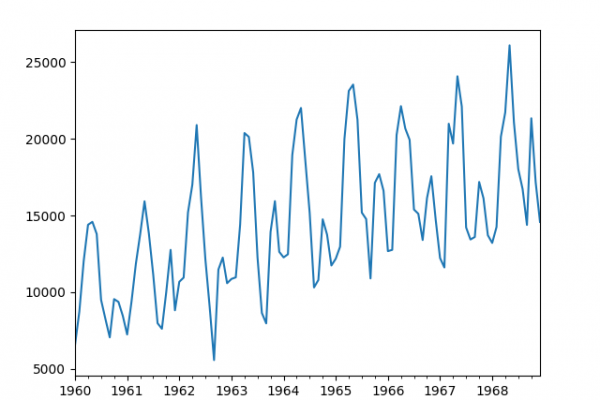

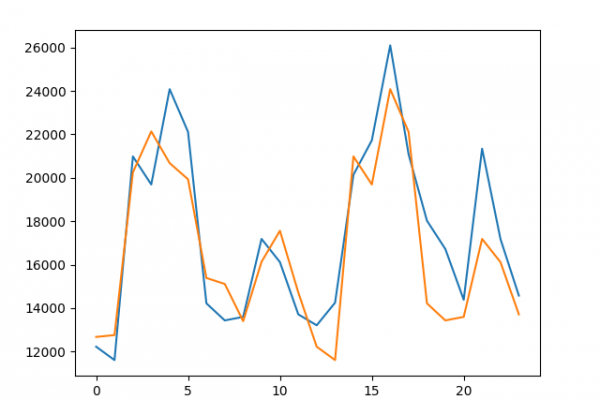

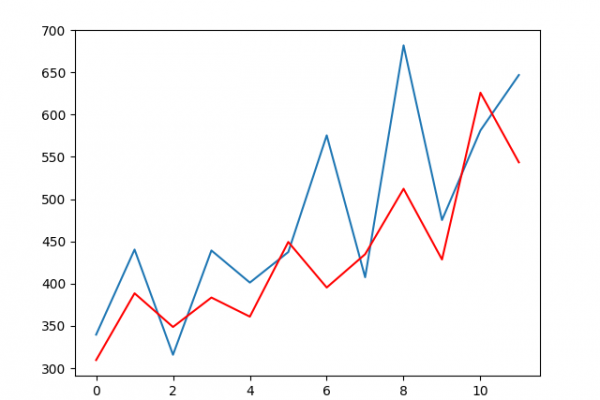

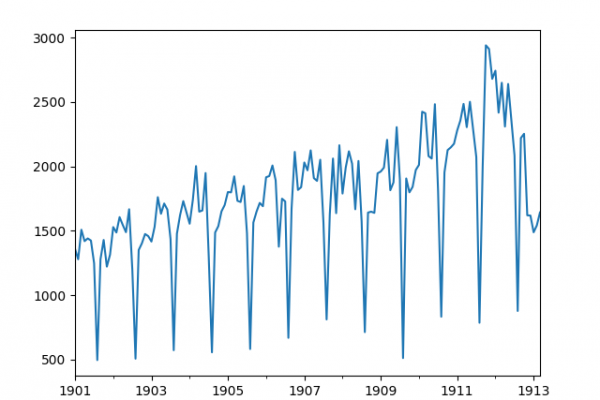

Last Updated on September 16, 2020

The use of machine learning methods on time series data requires feature engineering. A univariate time series dataset consists only of a sequence of observations. These observations must be transformed into input and output features before supervised learning algorithms can be applied. The problem is that there is almost no limit to the type and number of features you can engineer for a time series problem. Classical time series analysis tools like the correlogram can […]
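Turning a sequence of observations into input and output features can be sketched with a sliding window of lag observations. This is a minimal sketch, assuming the series is a plain Python list and that a fixed number of prior observations (`n_lags`) serve as the inputs for predicting the next value. The names `series_to_supervised` and `n_lags` are hypothetical and chosen for illustration; they are not part of any library.

```python
from typing import List, Sequence, Tuple


def series_to_supervised(
    values: Sequence[float], n_lags: int
) -> Tuple[List[List[float]], List[float]]:
    """Frame a univariate series as (inputs, output) pairs using lag features.

    Each row uses the n_lags observations before time t (t-n_lags, ..., t-1)
    as input features and the observation at time t as the output.
    Hypothetical helper for illustration only.
    """
    if n_lags < 1:
        raise ValueError("n_lags must be at least 1")
    X: List[List[float]] = []
    y: List[float] = []
    # Start at n_lags so that every row has a full window of past values.
    for t in range(n_lags, len(values)):
        X.append(list(values[t - n_lags:t]))
        y.append(values[t])
    return X, y


series = [10.0, 12.0, 13.0, 15.0, 14.0, 16.0]
X, y = series_to_supervised(series, n_lags=3)
for inputs, target in zip(X, y):
    print(inputs, "->", target)
# [10.0, 12.0, 13.0] -> 15.0
# [12.0, 13.0, 15.0] -> 14.0
# [13.0, 15.0, 14.0] -> 16.0
```

The first `n_lags` observations never appear as outputs because they lack a complete window of history. Choosing `n_lags` is exactly the kind of feature-selection decision the problem above describes: each extra lag adds one more input column.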