Standard Machine Learning Datasets To Practice in Weka

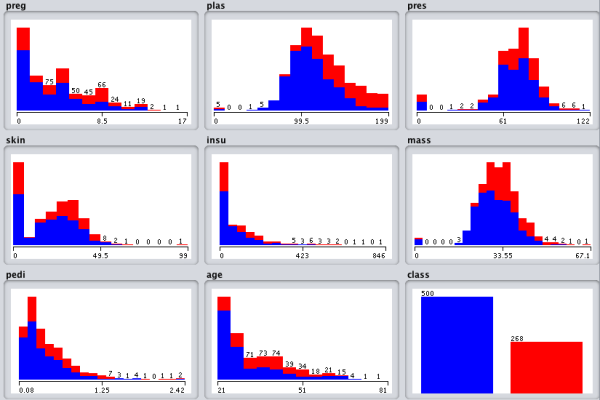

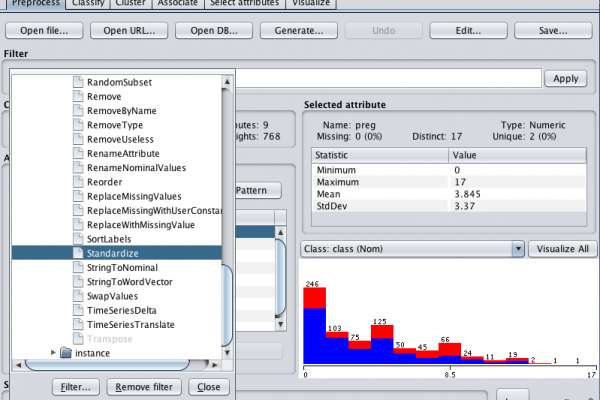

Last Updated on December 11, 2019

When you are getting started in machine learning or learning a new tool, it helps to work with small, well-understood datasets. The Weka machine learning workbench ships with a directory of such datasets in its installation directory. In this post you will discover some of the datasets distributed with Weka, their details, and where to learn more about them. We will focus on a handful of datasets of differing […]