Linear Algebra for Machine Learning

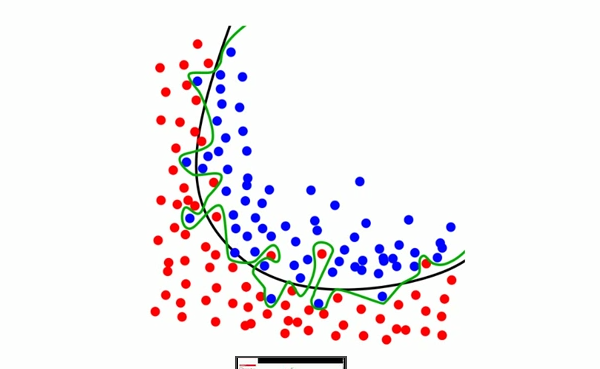

Last Updated on August 15, 2020

You do not need to learn linear algebra before you get started in machine learning, but at some point you may wish to dive deeper. In fact, if there were one area of mathematics I would suggest improving before the others, it would be linear algebra. It will give you the tools to help you with the other areas of mathematics required to understand and build better intuitions for machine learning algorithms. In this […]