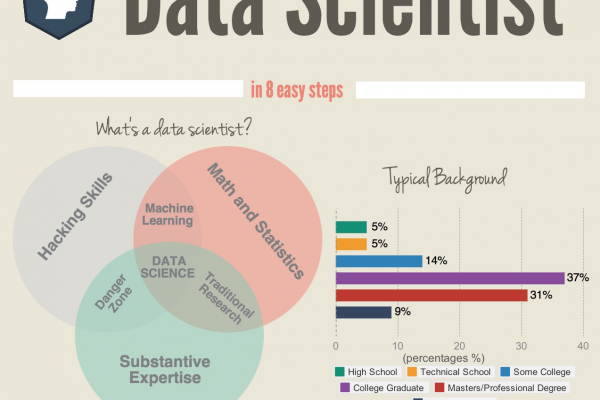

Hello World of Applied Machine Learning

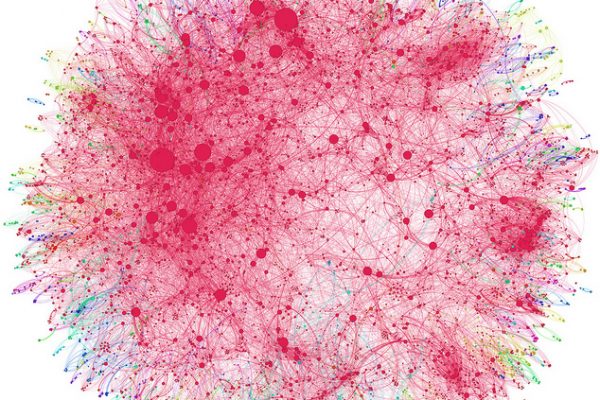

Last Updated on September 5, 2016

It is easy to feel overwhelmed by the large number of machine learning algorithms. There are so many to choose from that it is hard to know where to start and what to try. The choice can be paralyzing. You need to get over this fear and start. There is no magic book or course that is going to tell you what algorithm to use and when. In fact, in practice you cannot know this […]
