Prepare Data for Machine Learning in Python with Pandas

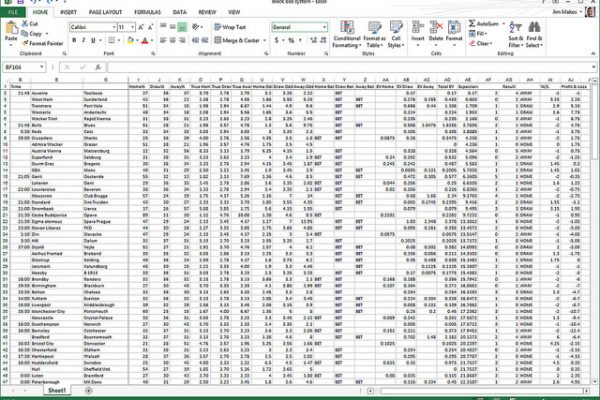

Last Updated on August 15, 2020

If you are using the Python stack for studying and applying machine learning, then the library that you will want to use for data analysis and data manipulation is Pandas. This post gives you a quick introduction to the Pandas library and points you in the right direction for getting started. Kick-start your project with my new book Machine Learning Mastery With Python, including step-by-step tutorials and the Python source code files for all […]
