Line Search Optimization With Python

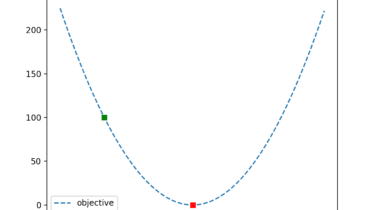

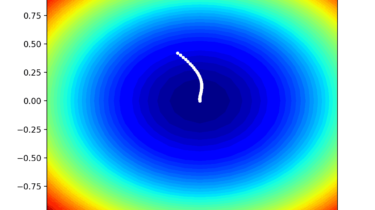

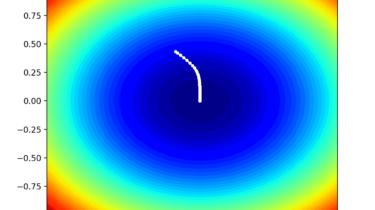

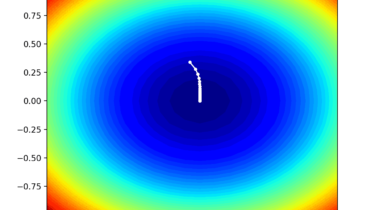

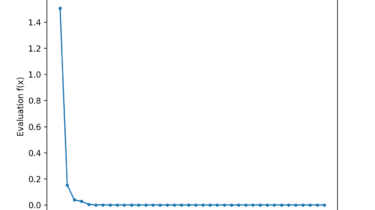

The line search is an optimization algorithm that can be used for objective functions with one or more variables. It provides a way to apply a univariate optimization algorithm, such as a bisection search, to a multivariate objective function: the univariate search locates the optimal step size along a search direction from a known point toward the optimum. In this tutorial, you will discover how to perform a line search optimization in Python. After completing this tutorial, you will know: […]
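To make the idea concrete, here is a minimal sketch in plain Python, not the tutorial's own code. It assumes a simple convex objective (the sum of squares), a known gradient, and steepest descent as the search direction. The names `objective`, `gradient`, `directional_slope`, and `bisection_line_search` are hypothetical helpers chosen for illustration. The bisection runs on the slope of the objective along the direction, which reduces the two-variable problem to a one-variable search over the step size.

```python
def objective(x):
    # Assumed test function: a convex bowl with its minimum at the origin.
    return x[0] ** 2 + x[1] ** 2


def gradient(x):
    # Analytic gradient of the assumed objective.
    return [2.0 * x[0], 2.0 * x[1]]


def directional_slope(point, direction, alpha):
    # Slope of the objective along `direction` at step size `alpha`,
    # i.e. the derivative of objective(point + alpha * direction) w.r.t. alpha.
    trial = [p + alpha * d for p, d in zip(point, direction)]
    return sum(g * d for g, d in zip(gradient(trial), direction))


def bisection_line_search(point, direction, lo=0.0, hi=1.0, tol=1e-8):
    # Bisection needs a sign change: descending at `lo`, ascending at `hi`.
    if directional_slope(point, direction, lo) >= 0.0:
        raise ValueError("direction is not a descent direction at lo")
    if directional_slope(point, direction, hi) <= 0.0:
        raise ValueError("no sign change in [lo, hi]; widen the bracket")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if directional_slope(point, direction, mid) > 0.0:
            hi = mid  # minimum along the line lies to the left of mid
        else:
            lo = mid  # minimum along the line lies at or to the right of mid
    return 0.5 * (lo + hi)


# Start from a known point and search along the negative gradient.
point = [1.0, 2.0]
direction = [-g for g in gradient(point)]
alpha = bisection_line_search(point, direction)
new_point = [p + alpha * d for p, d in zip(point, direction)]
print("step size:", alpha)
print("new point:", new_point, "objective:", objective(new_point))
```

For this particular objective and starting point, the step size should come out close to 0.5, which moves the point essentially onto the minimum at the origin. On less well-behaved functions, the bracket `[lo, hi]` and the choice of direction would need more care.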
