Anomalous diffusion in nonlinear transformations of the noisy voter model

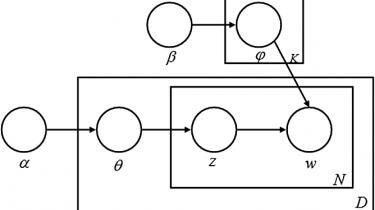

Voter models are well known in the interdisciplinary community, yet they have not been studied from the perspective of anomalous diffusion. In this paper we show that the original voter model exhibits a ballistic regime… Nonlinear transformations of the observation variable and time scale allow us to observe other regimes of anomalous diffusion as well as normal diffusion. We show that numerical simulation results coincide with derived analytical approximations describing the temporal evolution of the raw moments.
