Create Your Own Image Caption Generator Using Keras!

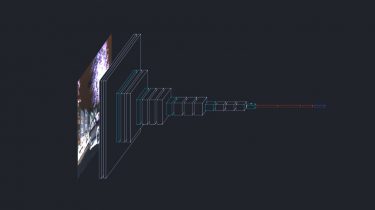

Overview

- Understand how an image caption generator works using the encoder-decoder architecture
- Know how to create your own image caption generator using Keras

Introduction

Image caption generation is a popular research area of Artificial Intelligence that deals with understanding an image and producing a language description for it. Generating well-formed sentences requires both syntactic and semantic understanding of the language. Describing the content of an image in accurately formed sentences is a very challenging task, but it could also […]
