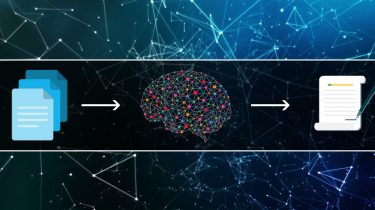

An Introduction to Text Summarization using the TextRank Algorithm (with Python implementation)

Introduction

Text summarization is one of those applications of Natural Language Processing (NLP) that is bound to have a huge impact on our lives. With digital media and publishing growing constantly, who has the time to go through entire articles, documents, or books to decide whether they are useful? Thankfully, this technology is already here. Have you come across the mobile app inshorts? It's an innovative news app that converts news articles into a […]
