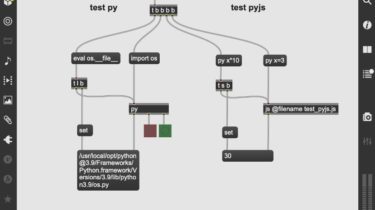

An Active Automata Learning Library Written in Python

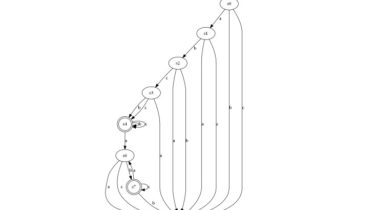

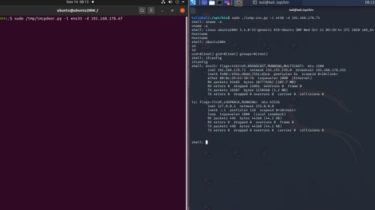

AALpy AALpy is a light-weight active automata learning library written in pure Python. By implementing a single method and a few lines of configuration, you can start learning automata. Whether you work with regular languages or you would like to learn models of reactive systems, AALpy supports a wide range of modeling formalisms, including deterministic, non-deterministic, and stochastic automata. You can use it to learn deterministic finite automata, Moore machines, and Mealy machines of deterministic systems. If the system that […]

Read more