
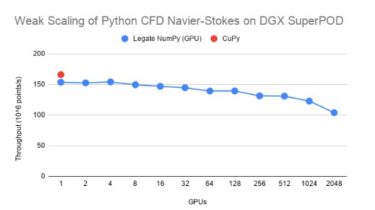

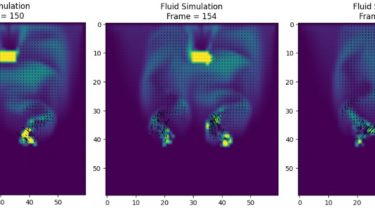

Legate NumPy is a Legate library that aims to provide a distributed and accelerated drop-in replacement for the NumPy API on top of the Legion runtime. Using Legate NumPy you can do things like run the final example of the Python CFD course completely unmodified on 2048 A100 GPUs in a DGX SuperPOD and achieve good weak scaling. Legate NumPy works best for programs that have very large arrays of data that cannot fit in the memory of a […]
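As a rough illustration of what "drop-in replacement" means in practice, the sketch below picks an array module at import time and then runs ordinary NumPy-style code against it. The module name `legate.numpy` is an assumption based on how the project has described its import, and `load_array_module` is a hypothetical helper written for this example, not part of Legate. The fallback to plain `numpy` is there only so the snippet can run on a machine without Legate installed.

```python
import importlib


def load_array_module(candidates=("legate.numpy", "numpy")):
    """Return the first importable module from `candidates`, or None.

    Hypothetical helper: the module names are assumptions, and only
    ImportError is handled (a Legate install launched outside its own
    driver may fail in other ways).
    """
    for name in candidates:
        try:
            return importlib.import_module(name)
        except ImportError:
            continue
    return None


np = load_array_module()

if np is not None:
    # Plain NumPy-style code: nothing below is specific to Legate.
    a = np.ones((4, 4))
    b = a * 2.0 + 1.0
    print(type(b).__module__, float(b.sum()))
else:
    print("no array module available")
```

The point of the pattern is that the array code itself stays unchanged; only the import decides whether it runs on a single CPU with NumPy or is handed to a distributed backend.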
