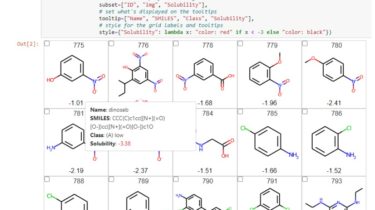

California coronavirus scrapers written in Python

california-coronavirus-scrapers

An experiment in open-sourcing the web scrapers that feed the Los Angeles Times’ California coronavirus tracker. The scrapers are written using Python and Jupyter notebooks, scheduled and run via GitHub Actions and then archived using git.

Installation

Clone the repository and install the Python dependencies: `pipenv install`

Run all of the scraper commands: `make`

Run one of the scraper commands: `make -f vaccine-doses-on-hand/Makefile`

GitHub: https://github.com/datadesk/california-coronavirus-scrapers
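The two `make` invocations above can also be driven from Python, for example from a notebook. The following is a minimal sketch and not part of the repository: the helper names `make_command` and `run_scraper` are hypothetical, and it assumes `make` is on the PATH and that the Pipenv environment is already active.

```python
import subprocess
from pathlib import Path
from typing import List, Optional


def make_command(scraper: Optional[str] = None) -> List[str]:
    """Build the make invocation for all scrapers, or for a single one.

    `scraper` is the name of a scraper directory that holds its own
    Makefile, such as "vaccine-doses-on-hand" (hypothetical helper).
    """
    if scraper is None:
        # Equivalent to running plain `make` in the repository root.
        return ["make"]
    # Equivalent to `make -f <scraper>/Makefile`.
    return ["make", "-f", (Path(scraper) / "Makefile").as_posix()]


def run_scraper(scraper: Optional[str] = None, repo_root: str = ".") -> int:
    """Run make from the repository root and return its exit code.

    Assumes the Python dependencies are already installed and the
    environment is active; this helper does not set that up.
    """
    return subprocess.run(make_command(scraper), cwd=repo_root).returncode
```

Calling `run_scraper("vaccine-doses-on-hand")` from the cloned repository would issue the same single-scraper command shown above, while `run_scraper()` would run them all.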
