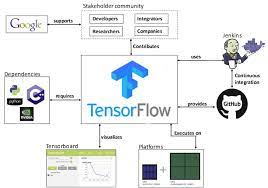

How Does TensorFlow Work?

TensorFlow lets you do the following. It lets you deploy computation to one or more CPUs or GPUs in a desktop, server, or mobile device in a straightforward way, so computations can run quickly. It lets you express your computation as a dataflow graph. It lets you visualize that graph with its built-in TensorBoard tool, which makes the graph easy to inspect and debug. TensorFlow […]
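To make the "dataflow graph" idea concrete, here is a toy sketch in plain Python. It is not TensorFlow's API; `Graph`, `Node`, and `placeholder` are hypothetical names invented for illustration. It shows only the core idea: you first describe a computation as nodes (operations) connected by edges (values flowing between them), and then execute the graph with concrete inputs.

```python
import operator
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Node:
    """One operation in the graph: a function plus the names of its input nodes."""
    op: Callable[..., float]
    inputs: List[str] = field(default_factory=list)


def placeholder() -> float:
    # Hypothetical input node: it computes nothing itself; its value must be fed at run time.
    raise ValueError("placeholder was not fed a value")


class Graph:
    """Toy dataflow graph: nodes are operations, edges carry values between them."""

    def __init__(self) -> None:
        self.nodes: Dict[str, Node] = {}

    def add(self, name: str, op: Callable[..., float], *inputs: str) -> str:
        # Inputs must already exist, so nodes are added in dependency order (no cycles).
        for dep in inputs:
            if dep not in self.nodes:
                raise KeyError(f"unknown input node: {dep}")
        self.nodes[name] = Node(op, list(inputs))
        return name

    def run(self, target: str, feeds: Dict[str, float]) -> float:
        # Evaluate only the nodes `target` depends on, each at most once (results are cached).
        values: Dict[str, float] = dict(feeds)

        def evaluate(name: str) -> float:
            if name not in values:
                node = self.nodes[name]
                args = [evaluate(dep) for dep in node.inputs]
                values[name] = node.op(*args)
            return values[name]

        return evaluate(target)


# Describe y = (a * b) + (a + b) as a graph, then execute it with concrete inputs.
g = Graph()
g.add("a", placeholder)
g.add("b", placeholder)
g.add("prod", operator.mul, "a", "b")
g.add("sum", operator.add, "a", "b")
g.add("y", operator.add, "prod", "sum")

print(g.run("y", {"a": 3.0, "b": 4.0}))  # → 19.0
```

Separating "describe the graph" from "run the graph" is what makes the features above possible in a real framework: once the computation is an explicit graph, it can be placed on different devices or drawn by a visualization tool, without changing how it was defined.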
