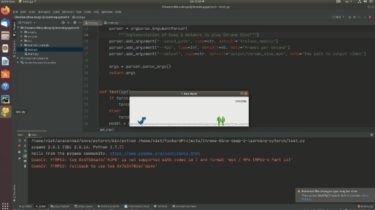

A Python wrapper for ngrok

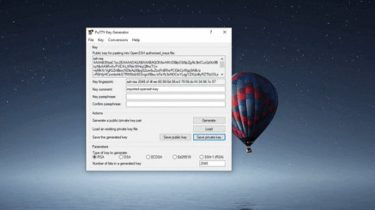

pyngrok pyngrok is a Python wrapper for ngrok that manages its own binary and puts it on your path, making ngrok readily available from anywhere on the command line and via a convenient Python API. ngrok is a reverse proxy tool that opens secure tunnels from public URLs to localhost, perfect for exposing local web servers, building webhook integrations, enabling SSH access, testing chatbots, demoing from your own machine, and more, and its made even more powerful with native Python […]

Read more