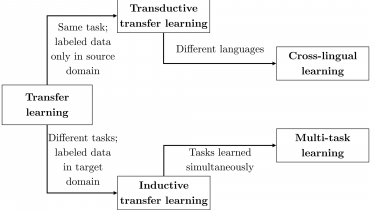

EMNLP 2018 Highlights: Inductive bias, cross-lingual learning, and more

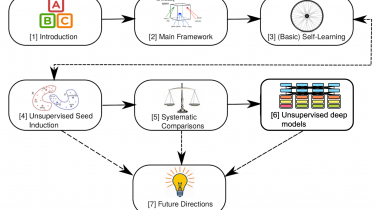

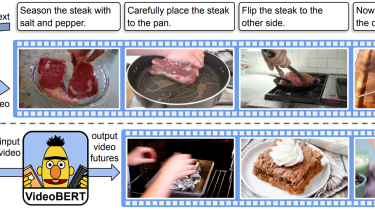

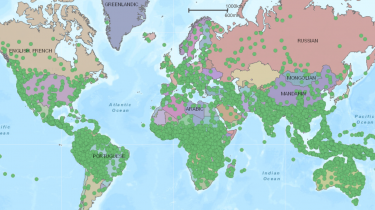

This post discusses highlights of the 2018 Conference on Empirical Methods in Natural Language Processing (EMNLP 2018). It originally appeared at the AYLIEN blog. You can find past highlights of conferences here. You can find all 549 accepted papers in the EMNLP proceedings. In this review, I will focus on papers that relate to the following topics:

Inductive bias

The inductive bias of a machine learning algorithm is the set of assumptions that the model makes in order to […]
