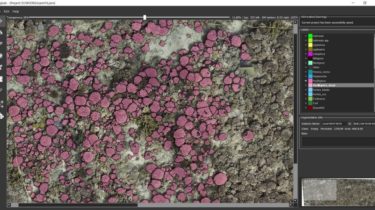

A CNN-based image segmentation tool oriented to marine data analysis

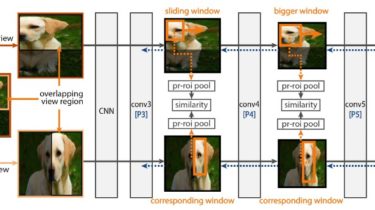

TagLab

TagLab was created to support the annotation of ortho-maps of benthic communities and the extraction of statistical data from them. The tool includes several types of CNN-based segmentation networks specifically trained for agnostic (contours only) or semantic (also species-aware) recognition of corals.

Interaction

TagLab allows you to: zoom and navigate a large map (zoom: mouse wheel; pan: left button with the 'Move' tool selected). With any other tool selected, panning is activated with Ctrl + left button […]
