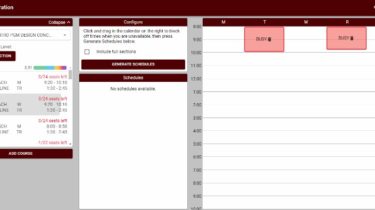

Automatic class scheduler for Texas A&M, written with Python + Django and React + TypeScript

Rev Registration is an automatic class scheduler for Texas A&M that eases course registration by generating compatible schedules from the courses a student wants to take and their preferences. Simply select a term, pick your courses, mark off when you're not available, and we'll generate schedules for you! For instance, imagine you've settled on 3 sections of a course you're fine with and are having trouble finding a schedule […]
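
To illustrate the idea of "generating compatible schedules," here is a minimal Python sketch of one common approach: backtracking over the acceptable sections for each course, pruning any section that clashes with an earlier pick or with a time the student marked unavailable. This is not Rev Registration's actual implementation. The `Section` class, the `(day, start_minute, end_minute)` meeting tuples, `generate_schedules`, and the course labels `ABC 101` / `XYZ 201` are all hypothetical names chosen for illustration.

```python
from dataclasses import dataclass
from typing import Dict, Iterator, List, Tuple

# Hypothetical representation: a meeting is (day, start_minute, end_minute),
# e.g. ("M", 540, 590) means Monday 9:00-9:50.
Meeting = Tuple[str, int, int]


@dataclass(frozen=True)
class Section:
    course: str                   # hypothetical course label, e.g. "ABC 101"
    number: str                   # hypothetical section number, e.g. "501"
    meetings: Tuple[Meeting, ...]


def overlaps(a: Meeting, b: Meeting) -> bool:
    """Two meetings conflict if they share a day and their time ranges intersect."""
    return a[0] == b[0] and a[1] < b[2] and b[1] < a[2]


def conflicts(section: Section, busy: List[Meeting]) -> bool:
    """True if any meeting of `section` overlaps any already-occupied time."""
    return any(overlaps(m, b) for m in section.meetings for b in busy)


def generate_schedules(
    choices: Dict[str, List[Section]], unavailable: List[Meeting]
) -> Iterator[List[Section]]:
    """Yield every pick of one section per course with no time conflicts."""
    courses = list(choices)

    def backtrack(i: int, picked: List[Section], busy: List[Meeting]) -> Iterator[List[Section]]:
        if i == len(courses):
            yield list(picked)  # one section chosen for every course
            return
        for section in choices[courses[i]]:
            if conflicts(section, busy):
                continue  # prune: clashes with an earlier pick or a blocked time
            picked.append(section)
            yield from backtrack(i + 1, picked, busy + list(section.meetings))
            picked.pop()

    # Blocked-off times start out as occupied, so they prune sections like classes do.
    yield from backtrack(0, [], list(unavailable))


if __name__ == "__main__":
    a1 = Section("ABC 101", "501", (("M", 540, 590), ("W", 540, 590)))
    a2 = Section("ABC 101", "502", (("M", 600, 650), ("W", 600, 650)))
    b1 = Section("XYZ 201", "501", (("M", 540, 590),))
    b2 = Section("XYZ 201", "502", (("T", 540, 615),))
    blocked = [("W", 600, 660)]  # student unavailable Wednesday 10:00-11:00

    for schedule in generate_schedules({"ABC 101": [a1, a2], "XYZ 201": [b1, b2]}, blocked):
        print([f"{s.course}-{s.number}" for s in schedule])
```

In the example, section 502 of the first course is ruled out by the blocked Wednesday slot, and section 501 of the second course clashes with 501 of the first, leaving a single compatible schedule. A real scheduler would likely also rank the surviving schedules by the student's preferences; that step is omitted here.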
