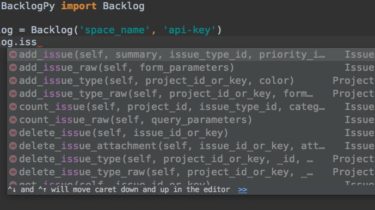

Backlog API v2 Client Library for Python

BacklogPy

BacklogPy is a Backlog API v2 client library for Python 2/3.

Install

You can install the client library with pip:

    $ pip install BacklogPy

Example

The client library has API call methods for all of the Backlog v2 API:

    >>> from BacklogPy import Backlog
    >>> backlog = Backlog('space_name', 'api-key')
    >>> response = backlog.get_project_list(all=True, archived=True)
    >>> print(response.json()[0])
    {'archived': False, 'chartEnabled': True, 'displayOrder': 1234563786,
     'id': 12345, 'name': 'Coffee Project', 'projectKey': 'COFFEE_PROJECT',
     'projectLeaderCanEditProjectLeader': True, 'subtaskingEnabled': False,
     'textFormattingRule': 'markdown', 'useWikiTreeView': True}

You can also use dict parameters […]
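As a rough sketch of what one might do with the decoded project list, the snippet below filters it down to the keys of non-archived projects. The helper name `active_project_keys` is hypothetical and not part of BacklogPy, and `sample_projects` is a stand-in for `response.json()` so that the snippet runs without a Backlog space or API key.

```python
def active_project_keys(projects):
    """Return the projectKey of every project that is not archived."""
    return [p["projectKey"] for p in projects if not p.get("archived", False)]


# Stand-in for response.json(). The first entry mirrors a few fields of the
# example output; the second entry is made up purely for illustration.
sample_projects = [
    {"archived": False, "id": 12345, "name": "Coffee Project",
     "projectKey": "COFFEE_PROJECT"},
    {"archived": True, "id": 12346, "name": "Tea Project",
     "projectKey": "TEA_PROJECT"},
]

print(active_project_keys(sample_projects))
```

With a live client, the same helper could presumably be given `response.json()` directly, assuming the response decodes to a list of project dicts as in the example.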
