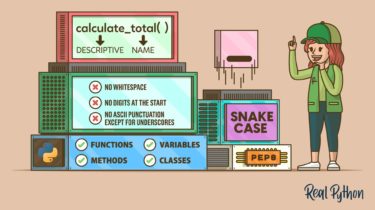

Quiz: Build a Blog Using Django, GraphQL, and Vue

Interactive Quiz ⋅ 8 Questions

By Philipp Acsany

In this quiz, you’ll test your understanding of building a Django blog back end and a Vue front end, using GraphQL to communicate between them. You’ll revisit how to run the Django server and a Vue application on your computer at the same time. The quiz contains 8 questions, and there is no time limit.
