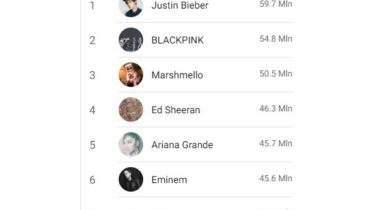

A bot that posts updates about the most subscribed artists’ channels on YouTube

Most Subscribed YouTube Music Channels

This Python bot checks the most subscribed music artist channels on YouTube and compiles them into a top chart.

How to see the charts

Web App

You can view the charts in the web app, which has a clean Material UI. The website can be “installed” as a web app on Android, iOS and Windows 10. This gives it the benefits of an app along with the advantages of the web: it takes up almost no storage space, but it can also work […]
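The chart-building step can be illustrated with a minimal sketch. It assumes the subscriber counts have already been collected; the `Channel` class, the `top_chart` function and the sample artists are hypothetical and are not taken from the bot's actual code.

```python
from dataclasses import dataclass


@dataclass
class Channel:
    """One artist channel and its subscriber count (hypothetical structure)."""
    name: str
    subscribers: int


def top_chart(channels: list[Channel], size: int = 10) -> list[tuple[int, str, int]]:
    """Rank channels by subscriber count, highest first.

    Returns (position, name, subscribers) tuples for the top `size` channels.
    Channels with equal counts keep their input order, because sorted() is
    stable even with reverse=True.
    """
    ranked = sorted(channels, key=lambda c: c.subscribers, reverse=True)
    return [(pos, c.name, c.subscribers) for pos, c in enumerate(ranked[:size], start=1)]


if __name__ == "__main__":
    # Made-up placeholder data; a real bot would fetch these counts from YouTube.
    sample = [
        Channel("Artist A", 1_200_000),
        Channel("Artist B", 5_400_000),
        Channel("Artist C", 3_100_000),
    ]
    for pos, name, subs in top_chart(sample, size=3):
        print(f"{pos}. {name}: {subs:,} subscribers")
```

Sorting the whole list and then slicing keeps the sketch simple. For a very long list of channels, `heapq.nlargest` would be an alternative way to pick the top entries.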
