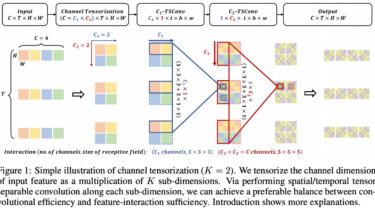

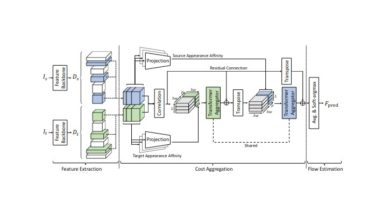

CT-Net: Channel Tensorization Network for Video Classification

@inproceedings{li2021ctnet,
  title={{{}CT{}}-Net: Channel Tensorization Network for Video Classification},
  author={Kunchang Li and Xianhang Li and Yali Wang and Jun Wang and Yu Qiao},
  booktitle={International Conference on Learning Representations},
  year={2021},
  url={https://openreview.net/forum?id=UoaQUQREMOs}
}

Overview

[2021/6/3] We have released the PyTorch code of CT-Net. More details and models will be made available.

Model Zoo

More models will be released within a month… For now, we have released the model for visualization. Please download it from here and put it […]
