Control and monitor your UniFi Protect cameras from Home Assistant

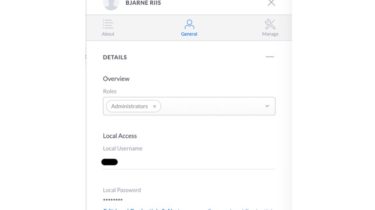

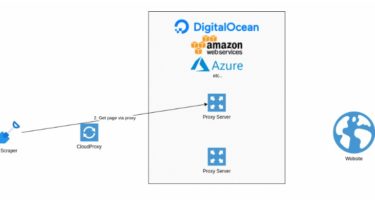

UniFi Protect for Home Assistant

The UniFi Protect integration adds support for retrieving camera feeds and sensor data from a UniFi Protect installation running on a Ubiquiti CloudKey+, a Ubiquiti UniFi Dream Machine Pro, or a UniFi Protect Network Video Recorder (NVR). It supports the following device types in Home Assistant:

- Camera: a camera entity is created for each camera found on the NVR device.
- Sensor: a sensor is created for each camera found. This sensor will hold the […]
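Because the integration creates one camera entity and one sensor per camera, you can check which entities exist by reading entity states from Home Assistant. The sketch below is a minimal illustration, assuming you query Home Assistant's REST API (`GET /api/states` with a long-lived access token). The helper names and the sample entity IDs (`camera.front_door`, `sensor.front_door`) are hypothetical, because actual IDs depend on your camera names. Also note that `/api/states` does not report which integration owns an entity, so grouping by domain lists every camera and sensor, not only the UniFi Protect ones.

```python
import json
import urllib.request
from collections import defaultdict


def group_entities_by_domain(states, domains=("camera", "sensor")):
    """Group entity IDs by domain (the part before the dot), keeping only the given domains."""
    grouped = defaultdict(list)
    for state in states:
        domain = state["entity_id"].split(".", 1)[0]
        if domain in domains:
            grouped[domain].append(state["entity_id"])
    return dict(grouped)


def fetch_states(base_url, token):
    """Fetch all entity states from the Home Assistant REST API (GET /api/states)."""
    req = urllib.request.Request(
        f"{base_url}/api/states",
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read().decode("utf-8"))


if __name__ == "__main__":
    # Hypothetical sample data standing in for fetch_states(...) output;
    # real entity IDs depend on how your cameras are named.
    sample = [
        {"entity_id": "camera.front_door"},
        {"entity_id": "sensor.front_door"},
        {"entity_id": "light.kitchen"},
    ]
    print(group_entities_by_domain(sample))
    # prints {'camera': ['camera.front_door'], 'sensor': ['sensor.front_door']}
```

Against a live instance, you would pass the result of `fetch_states("http://homeassistant.local:8123", token)` (a hypothetical host) to `group_entities_by_domain` instead of the sample list.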
