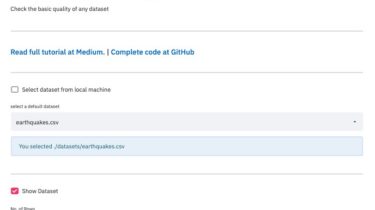

Data Quality Checker in Python

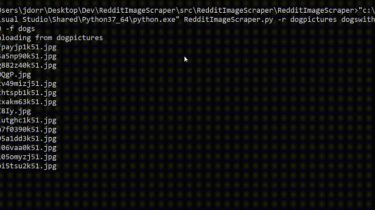

data-quality-checker

Check the basic quality of any dataset.

Requirements

- Python 3.7
- streamlit 0.60
- pandas
- numpy
- matplotlib

Usage

To run locally:

1. Install streamlit and the other dependencies listed under Requirements.
2. Clone the repository: `git clone https://github.com/maladeep/palmerpenguins-streamlit-eda.git`
3. Run the app: `streamlit run app.py`

Or simply open the web app: https://data-quality-checker.herokuapp.com/

GitHub: https://github.com/maladeep/data-quality-checker
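The README does not say which checks the app runs, so the following is only a minimal sketch of what a basic quality check on a dataset might look like with pandas. The function names `basic_quality_report` and `count_duplicate_rows` and the sample data are hypothetical and are not taken from the repository.

```python
import numpy as np
import pandas as pd


def basic_quality_report(df: pd.DataFrame) -> pd.DataFrame:
    """Summarize each column: dtype, missing count, missing %, distinct values.

    Hypothetical helper for illustration; not the app's actual code.
    """
    return pd.DataFrame({
        "dtype": df.dtypes.astype(str),
        "missing": df.isna().sum(),
        "missing_pct": (df.isna().mean() * 100).round(2),
        "unique": df.nunique(dropna=True),
    })


def count_duplicate_rows(df: pd.DataFrame) -> int:
    """Count rows that exactly repeat an earlier row."""
    return int(df.duplicated().sum())


# Made-up sample data, used only to exercise the helpers.
sample = pd.DataFrame({
    "species": ["Adelie", "Gentoo", "Adelie", "Adelie"],
    "mass_g": [3750.0, np.nan, 3750.0, 3800.0],
})

report = basic_quality_report(sample)
duplicates = count_duplicate_rows(sample)

print(report)
print("duplicate rows:", duplicates)
```

In a Streamlit app, a table like `report` could presumably be shown with `st.dataframe(report)`, though how the actual app presents its results is not described here.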
